Automate with n8n, MCP, and Ollama: Revolutionize Local Language Models

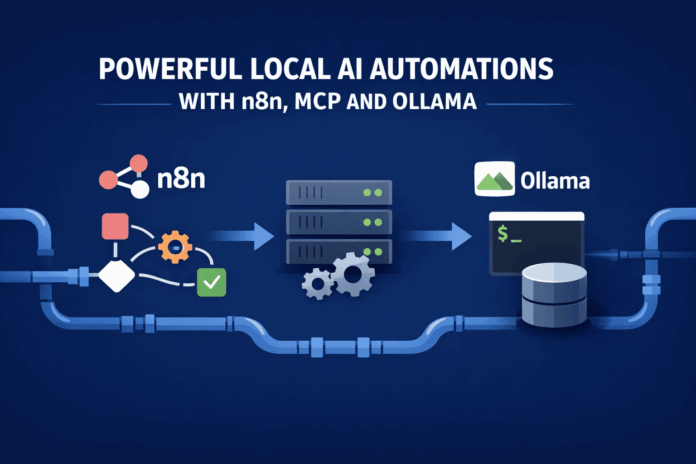

Running large language models (LLMs) locally can automate tasks that traditionally required engineering intervention. By combining n8n, the Model Context Protocol (MCP), and Ollama, organizations can run these workflows on a single workstation or small server, replacing fragile scripts and costly APIs.

Key applications include automated log triage, continuous data quality monitoring, and autonomous dataset labeling. In each case, n8n orchestrates the workflow, MCP constrains tool use, and Ollama handles local reasoning. This setup speeds up operations while keeping data on local hardware, which supports privacy and security. The same stack can also produce self-updating research briefs and incident postmortems, with an emphasis on structured, auditable outputs.
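To make the division of labor concrete, here is a minimal Python sketch of a log triage step. It assumes Ollama is serving its default local endpoint (`http://localhost:11434/api/generate`) and that a model named `llama3` has already been pulled; both are assumptions, not details from the article. The severity labels, the tool allow-list, and the function names are hypothetical. The allow-list only illustrates the idea of constraining tool use and is not the MCP SDK. In n8n, the same request could be issued from an HTTP Request node, with the validation step in a code node.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumption: Ollama's default local endpoint
MODEL = "llama3"  # assumption: any model already pulled locally
ALLOWED_SEVERITIES = {"info", "warning", "critical"}  # hypothetical output schema
ALLOWED_TOOLS = {"read_log", "open_ticket"}  # hypothetical allow-list, stands in for MCP tool constraints


def build_triage_request(log_line: str, model: str = MODEL) -> dict:
    """Build the JSON body for a non-streaming, JSON-formatted Ollama request."""
    prompt = (
        "Classify this log line. Reply with a JSON object containing "
        '"severity" (info, warning, or critical), "summary", and "tool" '
        f"(one of {sorted(ALLOWED_TOOLS)}).\n\nLog line: {log_line}"
    )
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,   # return one complete response instead of chunks
        "format": "json",  # ask Ollama to emit valid JSON
        "options": {"temperature": 0},  # reduce run-to-run variation
    }


def parse_triage(response_text: str) -> dict:
    """Validate the model's reply so downstream steps get auditable output."""
    data = json.loads(response_text)
    severity = data.get("severity")
    tool = data.get("tool")
    if severity not in ALLOWED_SEVERITIES:
        raise ValueError(f"unexpected severity: {severity!r}")
    if tool not in ALLOWED_TOOLS:
        raise ValueError(f"tool not on allow-list: {tool!r}")
    return {"severity": severity, "summary": str(data.get("summary", "")), "tool": tool}


def triage(log_line: str) -> dict:
    """Send one log line to the local model and return the validated result."""
    payload = json.dumps(build_triage_request(log_line)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # Ollama returns the generated text in the "response" field.
    return parse_triage(body["response"])
```

Calling `triage("disk full on /dev/sda1")` requires a running Ollama instance. Rejecting any reply that names a tool outside the allow-list is one simple way to keep the model's actions bounded and its outputs structured.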

With n8n automating the workflows and inference kept under tight local control, the stack becomes a practical option for businesses that want to optimize performance and reduce reliance on external services. In short, n8n, MCP, and Ollama together form a powerful, local automation ecosystem.