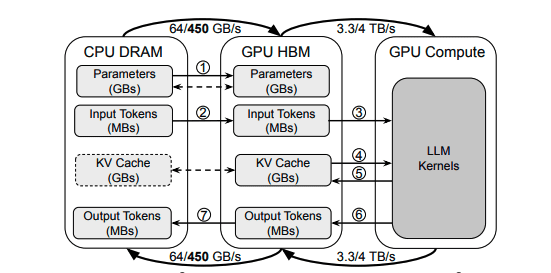

Researchers at The University of Texas at Austin have developed MIRAGE, a system that addresses inference-speed bottlenecks in large language models (LLMs) caused by growing memory demands. Conventional KV caching consumes a large share of GPU memory as model sizes grow, and the resulting frequent data transfers between CPU and GPU slow inference. MIRAGE instead dynamically repurposes GPU memory originally allocated to model parameters as additional KV cache storage, so cache data does not have to be swapped back and forth. This reduces synchronization overhead and improves latency and throughput, particularly in multi-tenant environments, where memory held by inactive models can be reclaimed for active ones. In experiments, MIRAGE achieved up to 82.5% lower tail latency and up to 86.7% higher throughput than existing methods. Because it adjusts the allocation in real time according to workload, MIRAGE offers a more efficient and scalable approach to LLM inference.
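The reclamation idea can be illustrated with a toy accounting model. This is only a sketch under stated assumptions, not MIRAGE's implementation: the summary above does not describe the system's internals, so the `Model` and `GpuPool` classes, the block granularity, and the "take from inactive models first" policy are all hypothetical. The sketch also ignores how parameters would be restored when an inactive model becomes active again.

```python
from dataclasses import dataclass, field


@dataclass
class Model:
    """Hypothetical bookkeeping for one tenant model on a shared GPU."""
    name: str
    param_blocks: int        # blocks still holding this model's parameters
    active: bool = True
    lent_blocks: int = 0     # parameter blocks currently lent to the KV cache


@dataclass
class GpuPool:
    """Toy GPU memory pool; all sizes are in abstract fixed-size blocks."""
    kv_free: int                                   # free KV cache blocks
    models: dict = field(default_factory=dict)

    def add_model(self, model: Model) -> None:
        self.models[model.name] = model

    def reclaim_from_inactive(self, needed: int) -> int:
        """Move up to `needed` blocks from inactive models into the KV pool."""
        reclaimed = 0
        for model in self.models.values():
            if model.active or reclaimed >= needed:
                continue
            take = min(model.param_blocks, needed - reclaimed)
            model.param_blocks -= take
            model.lent_blocks += take
            reclaimed += take
        self.kv_free += reclaimed
        return reclaimed

    def alloc_kv(self, blocks: int) -> bool:
        """Allocate KV blocks, growing the pool from inactive models if short."""
        if blocks > self.kv_free:
            self.reclaim_from_inactive(blocks - self.kv_free)
        if blocks > self.kv_free:
            return False  # still short; a real system would need another fallback
        self.kv_free -= blocks
        return True


pool = GpuPool(kv_free=4)
pool.add_model(Model("model_a", param_blocks=10, active=True))
pool.add_model(Model("model_b", param_blocks=10, active=False))

# The request needs 7 blocks but only 4 are free, so 3 are borrowed
# from the inactive model's parameter memory instead of going to the CPU.
ok = pool.alloc_kv(7)
print(ok, pool.kv_free, pool.models["model_b"])
```

In this toy model the active tenant's parameters are never touched, and the KV pool grows only when a request would otherwise fail, which mirrors the workload-driven adjustment described above.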
