🚨 AI App Security Crisis: An Urgent Call to Action 🚨

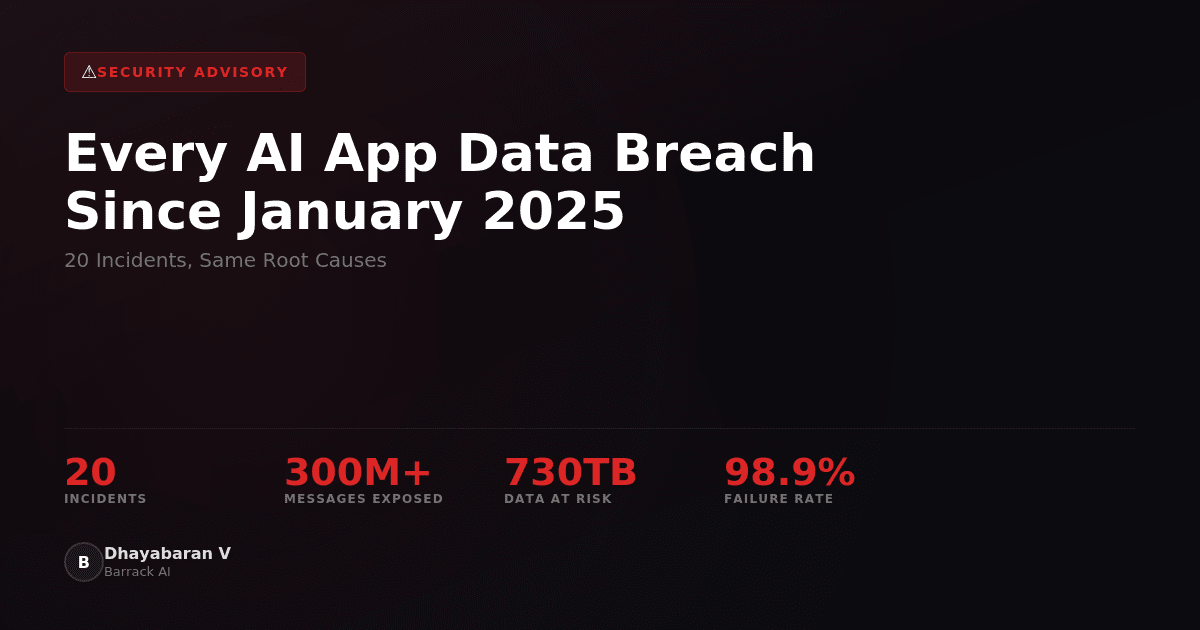

Between January 2025 and February 2026, more than 20 major security incidents exposed the personal data of millions of users across AI applications. The root causes are alarmingly basic:

- Misconfigured Firebase databases

- Missing Supabase Row Level Security

- Hardcoded API keys

- Exposed cloud backends
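To make the first item concrete, here is a minimal sketch of how an open Firebase Realtime Database rule set could be flagged. It assumes the JSON rules format, where `.read` and `.write` may be a boolean or an expression string; the helper name `find_open_rules` and the sample rules are hypothetical, made up for illustration, and Firestore uses a different rules language that this does not cover.

```python
import json


def find_open_rules(rules, path=""):
    """Return (path, key) pairs where .read or .write is unconditionally true."""
    findings = []
    for key, value in rules.items():
        if key in (".read", ".write"):
            # Rules may be the boolean true or the expression string "true".
            if value is True or (isinstance(value, str) and value.strip() == "true"):
                findings.append((path or "/", key))
        elif isinstance(value, dict):
            # Descend into child paths such as "users" or "$uid".
            findings.extend(find_open_rules(value, f"{path}/{key}"))
    return findings


# Hypothetical rules file: world-readable at the root, owner-only writes below.
SAMPLE_RULES = json.loads("""
{
  "rules": {
    ".read": true,
    "users": {
      "$uid": {
        ".write": "auth != null && auth.uid == $uid"
      }
    }
  }
}
""")

open_rules = find_open_rules(SAMPLE_RULES["rules"])
print(open_rules)  # [('/', '.read')]
```

A check like this only catches the most blatant case, a rule that is literally `true`. Overly broad conditions still need a manual review.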

Key Findings:

- Systemic Failures: Independent research found that 98.9% of scanned iOS apps had data leaks caused by configuration mistakes.

- Massive Data Breaches: The Chat & Ask AI app, for example, exposed 406 million records, affecting 25 million users.

- Structural Vulnerabilities: 72% of Android apps contained hardcoded secrets, putting sensitive information at risk.

The rush to deploy AI products has outpaced basic security measures, demanding immediate reform.

What You Can Do:

- Reassess your AI application security.

- Consider self-hosting on isolated infrastructure to keep sensitive data under your control and shrink your attack surface.
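One possible starting point for a reassessment is a quick scan of your source for hardcoded keys. The sketch below is a rough illustration, not a replacement for a dedicated secret scanner: the pattern set is a small assumed sample (the widely documented `AIza` and `AKIA` key prefixes plus a generic assignment check), and `scan_text` and `SAMPLE` are hypothetical names.

```python
import re

# Assumed, non-exhaustive patterns for illustration only.
PATTERNS = {
    "Google API key": re.compile(r"AIza[0-9A-Za-z_\-]{35}"),
    "AWS access key ID": re.compile(r"AKIA[0-9A-Z]{16}"),
    "Generic assignment": re.compile(
        r"(?i)(api[_-]?key|secret|token)\s*[:=]\s*[\"'][^\"']{16,}[\"']"
    ),
}


def scan_text(text):
    """Return (line_number, label) for every line that matches a pattern."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, label))
    return findings


# Fake key built from filler characters; not a real credential.
SAMPLE = 'const apiKey = "AIza' + "x" * 35 + '";\nconst name = "demo";'

for lineno, label in scan_text(SAMPLE):
    print(f"line {lineno}: possible {label}")
```

Anything a scan like this finds should be rotated and moved to server-side configuration, since a key shipped inside an app binary can be extracted by anyone who downloads it.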

🔗 Raise awareness: share this post and start a conversation on AI security! Let’s ensure our data stays protected in this evolving landscape.