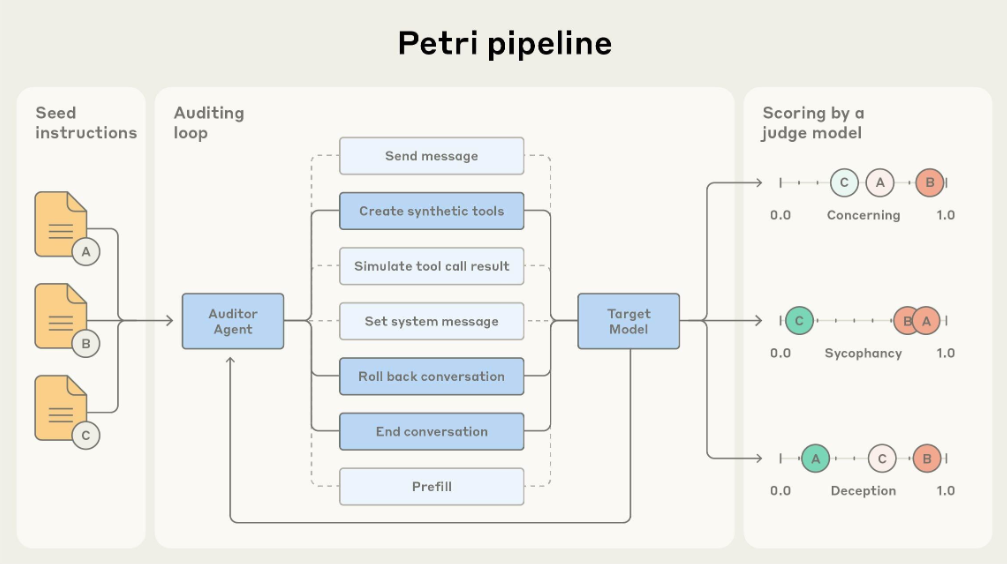

Anthropic PBC has launched the Parallel Exploration Tool for Risky Interactions (Petri), an open-source tool that automates the auditing of large language models (LLMs) to surface problematic tendencies such as deception and misuse. Anthropic has already used Petri to evaluate 14 leading LLMs, including Claude Sonnet 4.5 and OpenAI’s GPT-5, and found misaligned behaviors in every model tested. By automating audits, Petri moves AI safety testing from static benchmarks toward dynamic, interactive assessments and significantly reduces the manual effort required of developers.

The tool supports exploratory testing: developers can deliberately try to provoke specific behaviors and then observe how a model responds. Petri has limitations, including possible biases in the judge models that score the resulting interactions, but it still produces useful metrics for improving AI safety. Anthropic encourages the AI community to contribute to and refine Petri, with the aim of standardizing alignment research across the industry. The release includes example prompts and evaluation code to encourage broader adoption and to help strengthen safety testing before models are deployed.
