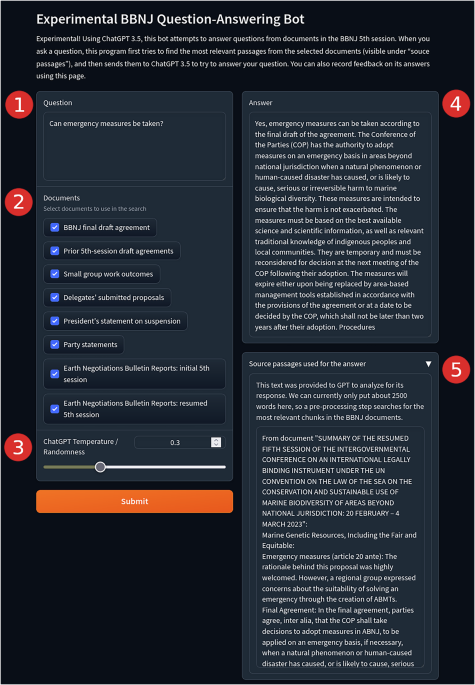

This analysis examines the implications of Large Language Models (LLMs) for equity in marine policymaking, particularly for developing nations and marginalized groups. It critiques biases in the BBNJ Question-Answering Bot, showing how AI models such as GPT can reinforce existing inequities through training data that predominantly reflects developed nations’ perspectives. The analysis emphasizes that over-reliance on AI can mislead policymakers and hinder essential capacity-building efforts, since marginalized states may lack the resources to engage effectively with AI tools. The findings suggest that these biases stem from both the foundation models and the design of LLM applications, and that they risk further alienating developing states in international discourse. Despite these challenges, LLMs could serve as valuable tools for democratizing access to marine policy information, offering tailored support to under-resourced governments navigating complex legal frameworks. Whether AI can assist maritime governance depends on addressing its inherent biases and on strengthening the capacity of developing countries to use these technologies effectively.
