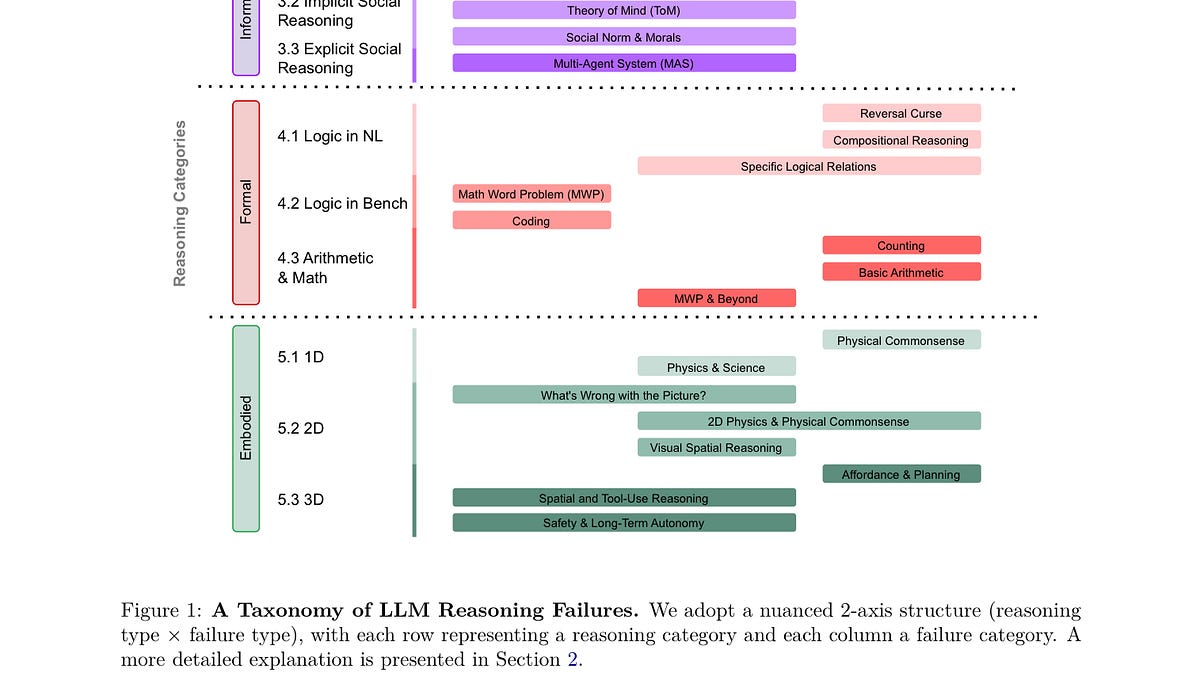

A recent analysis by Marcus argues that Large Language Models (LLMs) have critical flaws in their reasoning. Despite advances in artificial intelligence, Marcus contends, LLMs struggle to maintain logical consistency and to understand context. These limitations undermine the reliability and accuracy of their outputs, especially in complex scenarios. As industries increasingly adopt AI to support decision-making and automate processes, these reasoning deficiencies pose significant risks.

Marcus calls for a reevaluation of how these models are used, advocating for more robust frameworks that emphasize transparency and reliability. The article stresses the importance of ongoing research into LLM performance, urging developers to focus on improving the models' reasoning abilities rather than merely expanding the data they ingest. As businesses rely more heavily on AI, recognizing and addressing these flaws is crucial if LLMs are to support human reasoning rather than undermine it. Future work, the article concludes, must prioritize resolving these core issues so that AI’s full potential can be harnessed responsibly.
