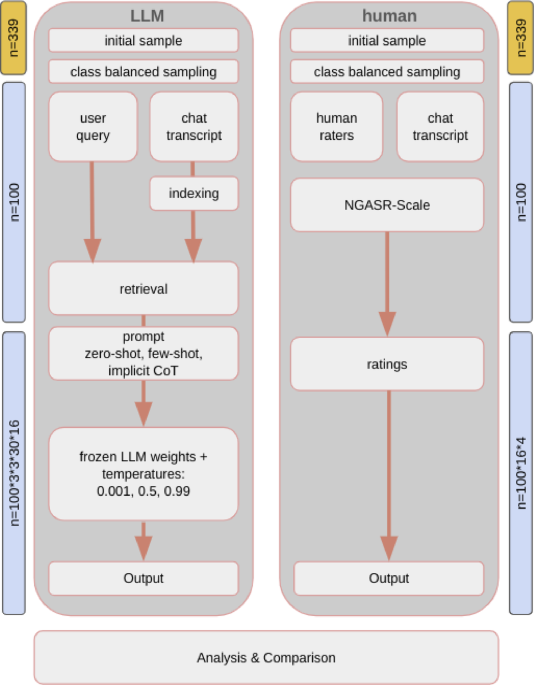

The study evaluated suicide risk in chat transcripts from krisenchat, a German crisis text line for young people. Four expert raters assessed more than 100 selected cases with the Nurses’ Global Assessment of Suicide Risk (NGASR) scale. Their ratings were compared with ratings generated by a large language model (LLM). Cases were drawn by stratified random sampling so that every risk level was represented in balanced proportions. Each transcript was rated on 16 items. The LLM produced multiple sets of scores by varying its prompting style and temperature setting. The statistical analysis covered inter-rater reliability, observer agreement, and classification metrics, which together measured how closely the LLM ratings matched the human judgments. The findings showed high observer agreement and suggest that LLM ratings can complement traditional risk assessments. The study followed the Declaration of Helsinki, anonymized the data, and applied appropriate consent procedures. The research aligns with the CONSORT-AI and TRIPOD-AI reporting guidelines and adds evidence on the potential of AI in mental health assessment.
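The summary does not say which agreement statistics the study used. As an illustration only, the sketch below shows three of the steps it names: stratified sampling by risk level, an agreement statistic, and classification metrics. It assumes each case is reduced to one ordinal risk level (0 = low up to 3 = very high). It uses unweighted Cohen's kappa between two raters, for example the LLM and a human consensus rating, as a stand-in for whatever reliability measure the study applied. It also treats levels at or above a chosen threshold as "high risk". The function names, level encoding, threshold, and toy data are hypothetical and are neither the study's data nor its method.

```python
import random
from collections import Counter, defaultdict


def stratified_sample(cases, per_stratum, seed=0):
    """Draw up to `per_stratum` case IDs from each risk level.

    `cases` is a list of (case_id, risk_level) pairs (hypothetical format).
    """
    rng = random.Random(seed)  # fixed seed for a reproducible draw
    by_level = defaultdict(list)
    for case_id, level in cases:
        by_level[level].append(case_id)
    sample = []
    for level in sorted(by_level):
        ids = by_level[level]
        k = min(per_stratum, len(ids))  # small strata contribute all their cases
        sample.extend((cid, level) for cid in rng.sample(ids, k))
    return sample


def cohen_kappa(a, b):
    """Unweighted Cohen's kappa between two raters' label sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement from each rater's marginal label frequencies.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


def sensitivity_specificity(reference, predicted, threshold):
    """Score `predicted` against `reference`; level >= threshold is positive."""
    tp = fn = fp = tn = 0
    for ref, pred in zip(reference, predicted):
        ref_pos, pred_pos = ref >= threshold, pred >= threshold
        if ref_pos and pred_pos:
            tp += 1
        elif ref_pos:
            fn += 1
        elif pred_pos:
            fp += 1
        else:
            tn += 1
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")
    specificity = tn / (tn + fp) if tn + fp else float("nan")
    return sensitivity, specificity


# Toy example with invented levels (0 = low ... 3 = very high), not study data.
cases = [(f"case{i}", i % 4) for i in range(20)]
print(stratified_sample(cases, per_stratum=2))  # two cases per risk level

human = [0, 1, 2, 3, 1, 2, 0, 3]  # e.g. a human consensus rating
llm = [0, 1, 2, 2, 2, 3, 0, 3]
print(cohen_kappa(human, llm))  # 0.5
print(sensitivity_specificity(human, llm, threshold=2))  # (1.0, 0.75)
```

Unweighted kappa counts every disagreement equally. For ordinal risk levels rated by four experts, a weighted kappa or an intraclass correlation across all raters would usually fit more closely, so this choice is a deliberate simplification.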
