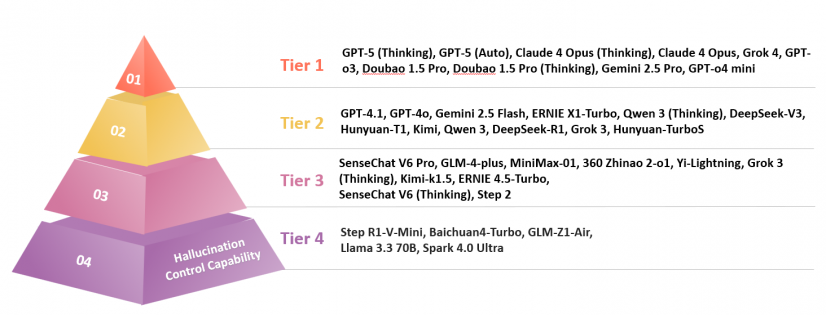

The HKU Business School has published the “Large Language Model (LLM) Hallucination Control Capability Evaluation Report,” which assesses how well LLMs control “hallucinations,” meaning outputs that diverge from factual accuracy or contextual relevance. Conducted by the Artificial Intelligence Evaluation Laboratory under Professor Jack Jiang, the study evaluated 37 models and found that GPT-5 (Thinking) and GPT-5 (Auto) ranked highest in hallucination control. The evaluation categorized hallucinations into factual and faithful types. According to the report, many models excel at maintaining instruction fidelity but struggle with factual accuracy, and reasoning models such as Claude 4 Opus are more adept at preventing inaccuracies. Notably, ByteDance’s Doubao 1.5 Pro series performed well among Chinese LLMs but still lags behind the top international models. The study underscores the need for continued improvement so that AI models deliver credible and reliable content in professional applications. For the full report, visit HKU’s AI model rankings.
