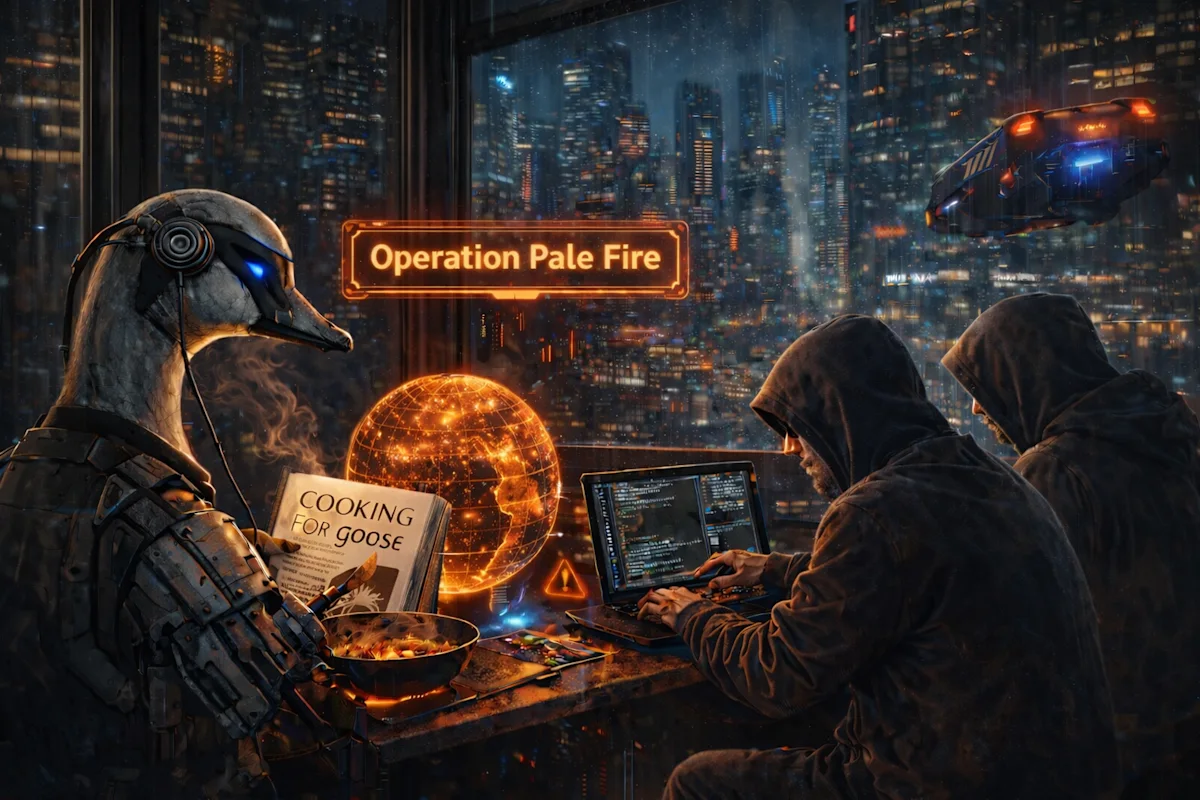

Enhancing AI Security: Lessons from Operation Pale Fire

At Block, we recognize that proactive security measures are essential to safeguarding AI systems. Our red team project, Operation Pale Fire, yielded valuable insights into how AI agents, including our open-source tool goose, can be weaponized.

Key Highlights:

- Red Team Engagement: Explored potential misuse of goose through prompt injection and social engineering.

- AI Attack Simulation: The red team compromised a Block employee’s laptop to test our defenses, exposing weaknesses in them.

- Detection and Response: DART contained the attack and used what it learned to refine our security protocols.

Lessons Learned:

- Treat AI output as untrusted user input.

- Implement robust behavioral monitoring.

- Design incident response plans specifically for AI breaches.

As the AI landscape evolves, embracing these principles will help organizations build secure and resilient systems.

🔗 Join the conversation! Share your thoughts on AI security practices, and let’s pave the way for safer AI innovation together.