Revolutionizing Long-Context Processing with DeepSeek-OCR

Large Language Models (LLMs) struggle with very long contexts: output quality degrades while compute and memory costs climb. Enter DeepSeek-OCR, an open-source model designed to compress long contexts effectively. Here's why it matters:

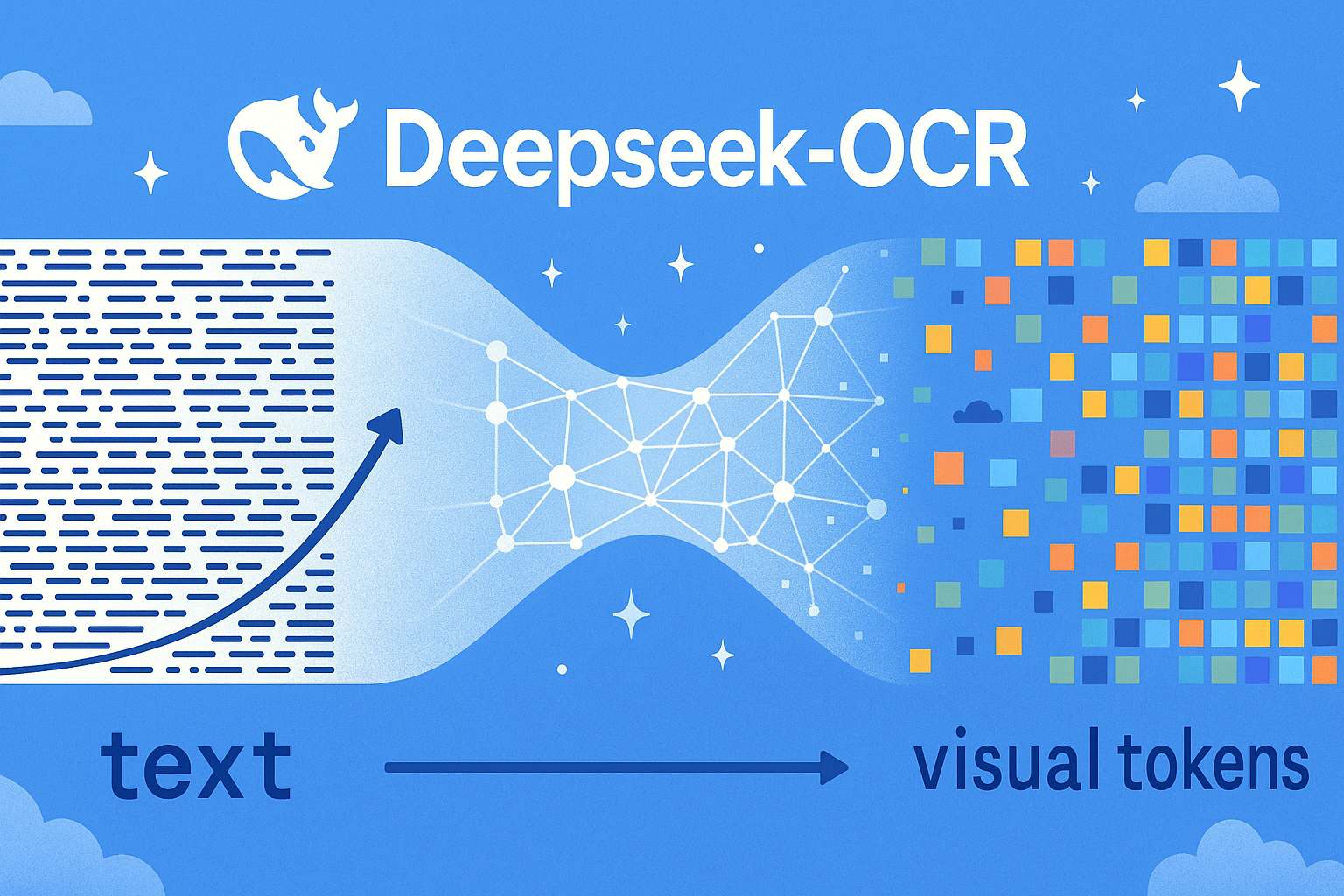

- Optical Compression: Renders text as images and encodes those images as vision tokens. One image can hold as much information as thousands of text tokens.

- High Efficiency: Cuts the number of tokens the model has to process, with reported efficiency gains of up to 60x.

- Enhanced Accuracy: Reports about 97% recognition accuracy during text reconstruction when compression stays below roughly 10x, with accuracy falling off at more aggressive ratios, while preserving layout and semantic meaning.
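
To make the compression idea concrete, here is a minimal Python sketch of the arithmetic behind it. The function name and the token counts are illustrative assumptions, not figures or APIs from DeepSeek-OCR.

```python
def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """Return how many text tokens each vision token stands in for."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens


# Hypothetical page: 1,000 text tokens rendered into an image that an
# encoder represents with 100 vision tokens (both numbers are made up).
ratio = compression_ratio(text_tokens=1000, vision_tokens=100)
print(f"{ratio:.1f}x compression")  # prints "10.0x compression"
```

The higher the ratio, the fewer tokens the model has to attend over, but the harder it becomes to reconstruct the original text exactly.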

DeepSeek-OCR integrates core components for refined processing:

- DeepEncoder: Encodes each image into a small number of vision tokens, which is where the high compression ratio comes from.

- MoE Decoder: A mixture-of-experts (MoE) decoder that efficiently reconstructs the content from the vision tokens, enabling structured data outputs.

This approach not only promises better long-context LLM performance but also paves the way for advancements in retrieval-augmented generation (RAG) systems, where retrieved documents quickly fill the context window.
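
As a rough sketch of why this matters for RAG, the snippet below estimates how many retrieved pages fit in a fixed context budget with and without optical compression. The budget, the per-page token counts, and the function name are hypothetical, and the sketch assumes the downstream model can consume vision tokens directly.

```python
def pages_that_fit(context_budget: int, tokens_per_page: int) -> int:
    """Return how many whole pages fit into a fixed token budget."""
    if tokens_per_page <= 0:
        raise ValueError("tokens_per_page must be positive")
    return context_budget // tokens_per_page


CONTEXT_BUDGET = 8_000         # hypothetical token budget for retrieved documents
TEXT_TOKENS_PER_PAGE = 1_000   # hypothetical cost of one page as plain text
VISION_TOKENS_PER_PAGE = 100   # hypothetical cost of the same page as an image

as_text = pages_that_fit(CONTEXT_BUDGET, TEXT_TOKENS_PER_PAGE)
as_images = pages_that_fit(CONTEXT_BUDGET, VISION_TOKENS_PER_PAGE)
print(f"plain text: {as_text} pages, compressed: {as_images} pages")
# prints "plain text: 8 pages, compressed: 80 pages"
```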

👉 Explore the future of AI and share your thoughts on this transformative technology! #ArtificialIntelligence #DeepLearning #TechInnovation