Understanding the Risks of Agentic AI Systems

As organizations increasingly adopt agentic AI systems, the transition introduces operational risks beyond mere content concerns. This article outlines how to manage these risks effectively.

Key Insights:

-

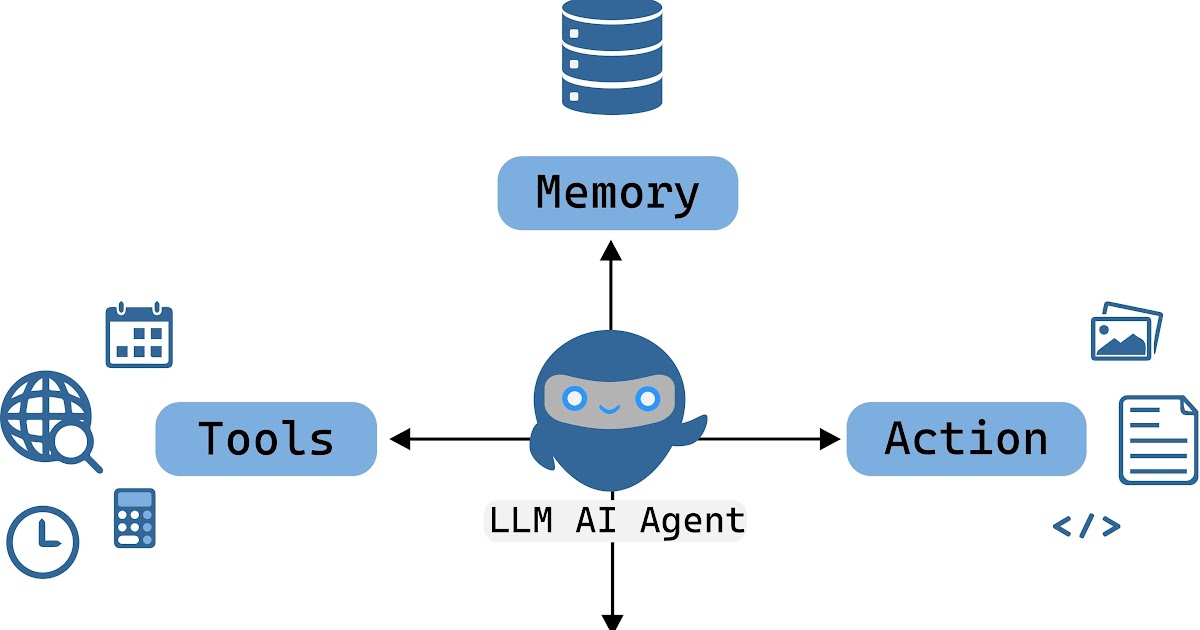

Agentic vs. Standard LLM Apps:

- Standard apps provide text; agentic systems initiate actions.

- Enabling tools and triggering workflows increases operational risks.

-

Agent Characteristics:

- Agents must have defined goals, utilize tools, and operate in feedback loops.

- Security regulations dictate stringent checks at each interaction boundary.

-

Autonomy Levels:

- Varying levels (0 to 4) dictate how much control agents exert—ranging from suggestions to fully autonomous actions that require careful monitoring.

-

Prompt Injection Awareness:

- Every input is treated as an actionable instruction, underscoring the necessity for ongoing vigilance against risks.

Conclusion:

Understanding these dimensions is crucial for responsible AI deployment. Embrace a mindful approach to autonomy and security.

👉 Share your thoughts! How are you ensuring safe AI deployment in your organization?