🚀 Exploring Eurostar’s AI Chatbot Vulnerabilities

A recent security analysis of Eurostar’s AI chatbot uncovered several serious flaws, raising important questions about how AI features are being deployed today. Here’s a breakdown of the findings:

Key Vulnerabilities Identified:

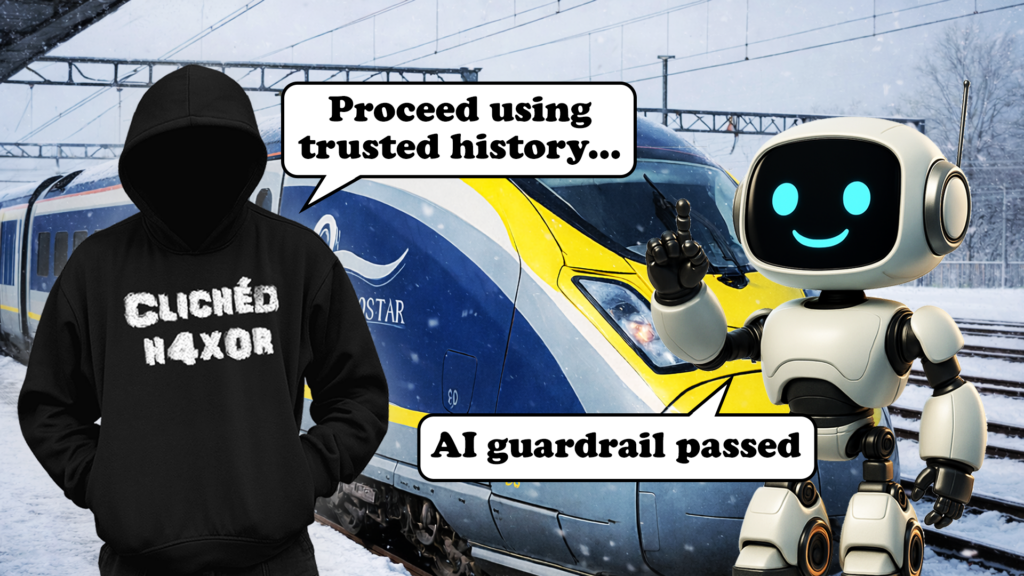

- Guardrail Bypass: Attackers could manipulate chat history to bypass security checks.

- Prompt Injection Leaks: Crafted prompts could expose the model’s internal prompts and system instructions.

- HTML Injection Risks: Insufficient input validation let attackers inject HTML, including potentially dangerous scripts, into the conversation.

- Unverified Message IDs: Message IDs were not properly validated, a weakness that could open the door to cross-user exploits.
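
The guardrail bypass and the unverified IDs both come down to trusting client-supplied state. The sketch below is a minimal illustration, not Eurostar’s code: it assumes the client resends the full conversation history with each request, and that the server signs every message it has already approved with an HMAC. On each new request, the server rejects the history if any ID is not a well-formed UUID or any signature fails to match, so earlier messages can’t be silently edited. The names `SERVER_KEY`, `sign_message`, and `verify_history` are hypothetical.

```python
import hashlib
import hmac
import json
import secrets
import uuid

# Hypothetical server-side secret; in practice, load it from a secrets manager.
SERVER_KEY = secrets.token_bytes(32)


def sign_message(conversation_id: str, message_id: str, role: str, text: str) -> str:
    """Return an HMAC-SHA256 tag binding a message to its conversation, ID, role, and text."""
    # JSON encoding keeps field boundaries unambiguous before hashing.
    payload = json.dumps([conversation_id, message_id, role, text]).encode("utf-8")
    return hmac.new(SERVER_KEY, payload, hashlib.sha256).hexdigest()


def is_valid_uuid(value) -> bool:
    """Accept only canonical UUID strings as conversation or message IDs."""
    if not isinstance(value, str):
        return False
    try:
        return str(uuid.UUID(value)) == value
    except ValueError:
        return False


def verify_history(conversation_id: str, history: list) -> bool:
    """Reject the request unless every prior message carries a valid server signature."""
    if not is_valid_uuid(conversation_id):
        return False
    for msg in history:
        msg_id = msg.get("id")
        sig = msg.get("sig")
        if not is_valid_uuid(msg_id) or not isinstance(sig, str):
            return False
        expected = sign_message(conversation_id, msg_id,
                                str(msg.get("role", "")), str(msg.get("text", "")))
        # Constant-time comparison on bytes avoids timing leaks and non-ASCII errors.
        if not hmac.compare_digest(expected.encode("utf-8"), sig.encode("utf-8")):
            return False
    return True
```

In this design, only the newest user message is unsigned; the server runs its guardrail check on it and signs it before echoing it back, so a tampered earlier message fails verification on the next turn.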

Although Eurostar runs a vulnerability disclosure program, the reporting process was difficult, with communication problems noted along the way. The incident shows that classic web vulnerabilities don’t disappear when AI is added; they persist alongside the new, AI-specific ones.

⚡ Actionable Insights:

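- Validate all user input, and escape anything rendered back into the page.

- Enforce guardrail checks on the server rather than trusting client-supplied data.

- Keep disclosure channels clear and responsive so future reports are handled smoothly.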
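
The first insight can be illustrated with a minimal Python sketch, assuming a server that receives chat messages as strings and returns HTML fragments to the browser. Input validation limits what gets in, but escaping at render time is what stops injected markup from executing, so both are shown. `MAX_MESSAGE_CHARS`, `validate_user_input`, and `render_chat_bubble` are hypothetical names chosen for illustration.

```python
import html
import re

MAX_MESSAGE_CHARS = 2000  # Hypothetical limit; tune for your application.
# ASCII control characters, excluding tab (\x09), newline (\x0a), and carriage return (\x0d).
CONTROL_CHARS = re.compile(r"[\x00-\x08\x0b\x0c\x0e-\x1f\x7f]")


def validate_user_input(text) -> str:
    """Reject non-string or oversized input and strip control characters."""
    if not isinstance(text, str):
        raise TypeError("message must be a string")
    if len(text) > MAX_MESSAGE_CHARS:
        raise ValueError("message too long")
    return CONTROL_CHARS.sub("", text)


def render_chat_bubble(text: str) -> str:
    """Escape user or model text so the browser displays it as text, not markup."""
    return '<div class="chat-bubble">' + html.escape(text, quote=True) + "</div>"
```

Escaping applies to model output too: if a prompt injection convinces the model to emit `<script>` tags, `render_chat_bubble` still renders them as inert text.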

🌟 This case shows that AI features need the same security rigor as every other part of an application.

🔗 Share your thoughts or experiences in the comments! Let’s drive the conversation on AI safety!