Unlocking AI-Assisted Security: A Comparative Study of Models Against Vulnerabilities

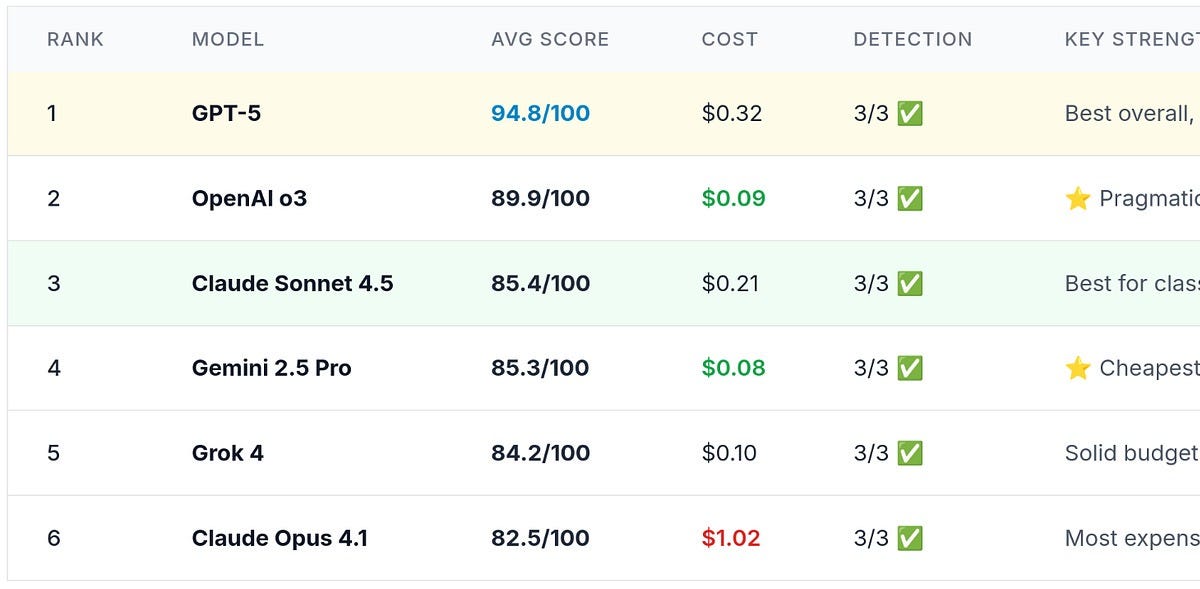

As AI tools become part of everyday software development, our latest study tested six advanced AI models, including GPT-5, OpenAI o3, Claude, Gemini, and Grok, against three critical security vulnerabilities. The results are directly relevant to developers who use AI for security auditing.

Key Findings:

- 100% Detection Rate: All models identified every vulnerability.

- Quality Varied by Model:
  - GPT-5: 94.8/100, best for comprehensive security measures.
  - OpenAI o3: 89.9/100, pragmatic and production-ready.
  - Claude Sonnet 4.5: about 90% of GPT-5's quality at a lower cost.
  - Gemini 2.5 Pro: budget-friendly, with 75% lower costs and decent efficacy.

Insights:

- Cost vs. Quality Trade-off: choose based on your security needs.
  - For mission-critical systems: opt for GPT-5 or o3.
  - For routine checks: consider Claude or Gemini.
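To make the trade-off concrete, the recommendation can be encoded as a simple lookup. This is a minimal Python sketch, not part of the study: the `Criticality` tiers, the `RECOMMENDED_MODELS` mapping, and the `recommend_models` function are hypothetical names, and the mapping only mirrors the two tiers described in the insights.

```python
from enum import Enum


class Criticality(Enum):
    """Hypothetical labels for how critical the audited system is."""
    MISSION_CRITICAL = "mission_critical"
    ROUTINE = "routine"


# Illustrative mapping that mirrors the study's two recommendation tiers.
# The tier names and this table are assumptions, not the study's tooling.
RECOMMENDED_MODELS: dict[Criticality, list[str]] = {
    Criticality.MISSION_CRITICAL: ["GPT-5", "OpenAI o3"],
    Criticality.ROUTINE: ["Claude Sonnet 4.5", "Gemini 2.5 Pro"],
}


def recommend_models(criticality: Criticality) -> list[str]:
    """Return a copy of the candidate models for the given tier."""
    return list(RECOMMENDED_MODELS[criticality])


if __name__ == "__main__":
    print(recommend_models(Criticality.ROUTINE))
```

In a real pipeline you would likely extend the table with your own cost limits or in-house benchmark results rather than relying on two fixed tiers.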

Dive deeper into the analysis and discover which model fits your project.

🔗 Engage with us! Share your thoughts or experiences on AI security audits in the comments below!