Optimize Your Embedding Model with Vespa

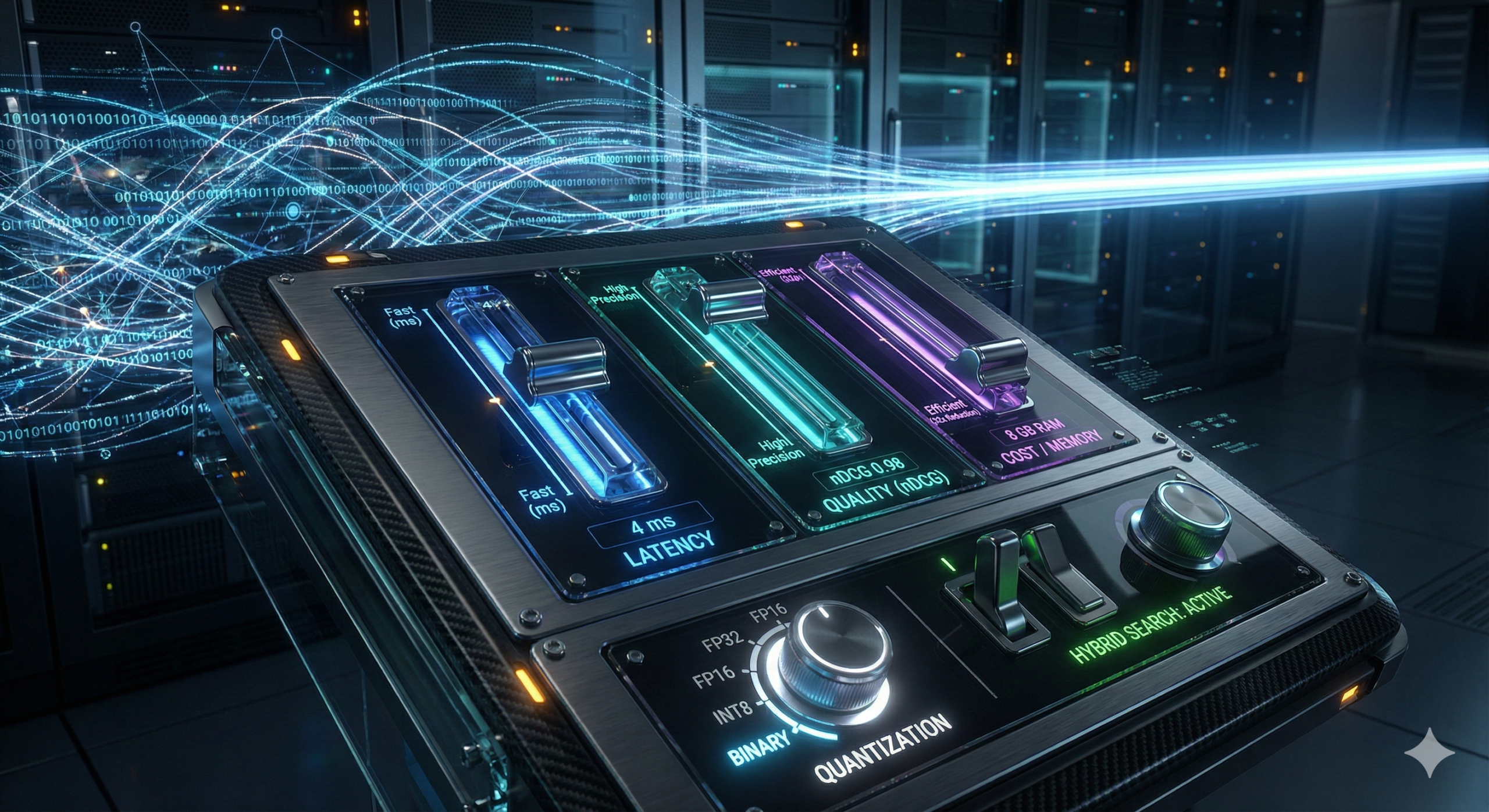

Selecting the right embedding model is crucial for anyone using Vespa. Our latest benchmarks reveal significant trade-offs between model size, inference speed, memory footprint, and retrieval quality. Understanding them can help you get more out of hybrid search. Here's what we discovered:

- Model Selection: Focus on open-source models under 500M parameters that have ONNX weights available.

- Inference Speed: Achieve up to 4x faster query performance through model quantization:

  - INT8 models deliver large speedups on CPU.

  - FP16 models outperform INT8 on GPU.
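Quantization speeds up inference by storing weights (and running the math) in lower precision. As a rough illustration only, here is a minimal sketch of symmetric per-tensor INT8 weight quantization in plain Python. It is not how Vespa or ONNX Runtime quantize models internally; real toolchains add per-channel scales, calibration, and optimized integer kernels. The weight values are made up for the example.

```python
"""Illustrative sketch: symmetric per-tensor INT8 quantization (not a production implementation)."""


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, which would otherwise give a zero scale.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the symmetric INT8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the INT8 codes."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.42, -1.27, 0.05, 0.9]  # hypothetical FP32 weights
    q, scale = quantize_int8(weights)
    approx = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, approx))
    print(q)
    print(f"scale={scale:.4f}, max reconstruction error={max_err:.4f}")
    # FP32 needs 4 bytes per weight and INT8 needs 1, so weights shrink 4x.
    print(f"FP32 bytes: {4 * len(weights)}, INT8 bytes: {len(q)}")
```

The per-weight rounding error is at most half the scale, which is why INT8 usually costs little accuracy. Whether it is also faster depends on the hardware having efficient integer kernels, which fits the post's observation that INT8 pays off on CPU while FP16 does better on GPU.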

Key Findings:

- Storage & Precision: Binary vectors can reduce memory usage by 32x while retaining up to 98% of the quality of full-precision vectors.

- Hybrid Retrieval: Combining BM25 with vector-similarity ranking improves quality by 3-5% on average.
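The 32x figure follows from storing 1 bit per dimension instead of a 32-bit float. The sketch below assumes a common sign-based binarization (positive values become 1, everything else 0), packs 8 dimensions per byte, and compares vectors with Hamming distance. It only shows the arithmetic; it does not show how binarization or distance metrics are configured in a Vespa schema, and the 384-dimension size and sample vectors are hypothetical.

```python
"""Sketch: binarize float embeddings to 1 bit per dimension and compare them with Hamming distance."""


def binarize(vec: list[float]) -> bytes:
    """Pack a float vector into bits: 1 if the value is > 0, else 0 (8 dims per byte)."""
    out = bytearray((len(vec) + 7) // 8)
    for i, x in enumerate(vec):
        if x > 0:
            # Fill the most significant bit first within each byte.
            out[i // 8] |= 1 << (7 - i % 8)
    return bytes(out)


def hamming(a: bytes, b: bytes) -> int:
    """Count the bits that differ between two packed vectors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


if __name__ == "__main__":
    dims = 384                   # hypothetical embedding dimensionality
    float_bytes = dims * 4       # float32: 4 bytes per dimension
    binary_bytes = dims // 8     # 1 bit per dimension
    print(float_bytes, binary_bytes, float_bytes // binary_bytes)  # 1536 48 32

    query = binarize([0.3, -0.1, 0.8, -0.5, 0.2, 0.0, -0.7, 0.4])
    doc = binarize([0.1, -0.2, 0.6, 0.5, 0.3, -0.1, -0.9, 0.2])
    print(hamming(query, doc))  # 1 (only the fourth dimension changes sign)
```

Hamming distance on packed bits is very cheap to compute, which is part of the appeal. The quality-retention numbers above come from the benchmark, not from this sketch.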
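The post does not say how the BM25 and vector rankings were combined. One common and easy-to-reason-about option is reciprocal rank fusion (RRF), sketched below under that assumption. Each document scores the sum of 1 / (k + rank) over the rankings it appears in, with k = 60 as a conventional default. The document IDs are hypothetical, and Vespa can also combine signals inside its own rank profiles; this standalone sketch only shows the fusion arithmetic.

```python
"""Sketch: fuse a BM25 ranking and a vector-similarity ranking with reciprocal rank fusion (RRF)."""


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Score each doc by the sum of 1 / (k + rank) across rankings, with 1-based ranks."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties are broken by doc id so the output is deterministic.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    bm25 = ["doc3", "doc1", "doc7"]     # hypothetical lexical (BM25) results
    vector = ["doc1", "doc5", "doc3"]   # hypothetical nearest-neighbor results
    for doc_id, score in reciprocal_rank_fusion([bm25, vector]):
        print(f"{doc_id}: {score:.4f}")
```

RRF only uses ranks, so it sidesteps the problem that BM25 scores and vector similarities live on different scales. The trade-off is that it discards how confident each retriever was. Score-normalized linear blending is the usual alternative when that information matters.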

For the full benchmark results, explore our interactive leaderboard.

✨ Join the Vespa community on Slack to swap ideas and stay up to date on the latest enhancements, and share your thoughts and experiences below! 👇