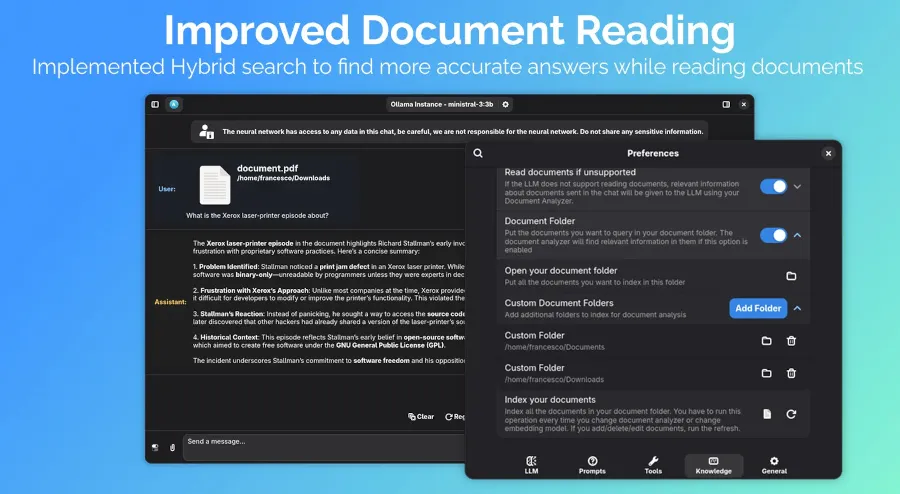

Newelle, a virtual AI assistant for the GNOME desktop, has released version 1.2. This update broadens AI integration, with support for Google Gemini, OpenAI, Groq, and local LLMs. A key addition is llama.cpp integration, which supports a range of back-ends, from CPU-only builds to GPU-specific options such as Vulkan. A new model library also makes it easier to use models through Ollama and llama.cpp.

For working with documents, Newelle now offers hybrid search, which improves how it reads documents stored on your filesystem. The release also introduces a command execution tool that lets the AI run commands on the local system, a capability that may raise security concerns. Other improvements include tool groups, better MCP server handling, a semantic memory handler, and chat import/export.

More details are available in “This Week in GNOME,” and Newelle 1.2 can be downloaded from Flathub.
