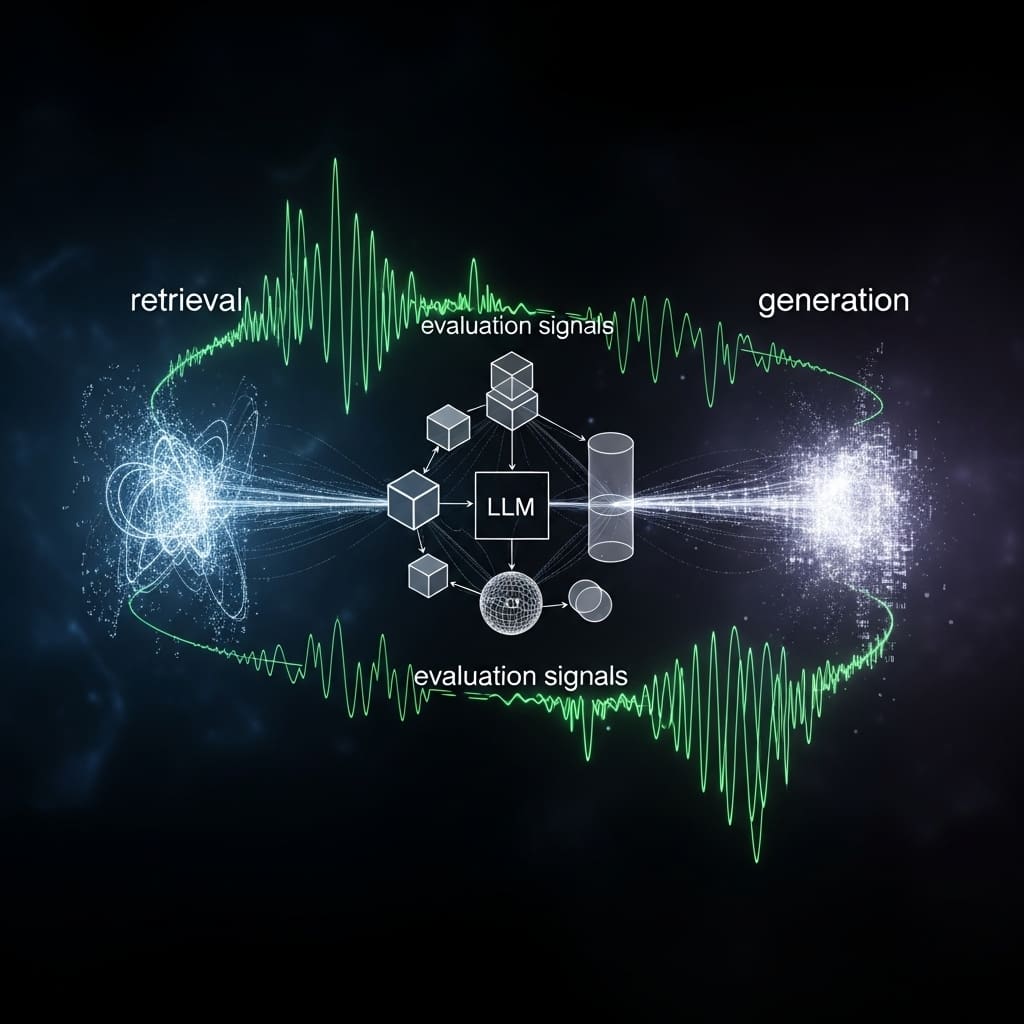

Researchers have introduced Grounding Utility (GroGU), a metric for assessing how useful retrieved content is in Retrieval-Augmented Generation (RAG) systems. GroGU measures this utility through the confidence of a large language model (LLM), quantified via entropy. Traditional methods require costly human annotations. GroGU instead provides a model-specific evaluation that effectively distinguishes relevant from irrelevant documents. Because it defines utility as a function of the LLM's own confidence, GroGU captures nuances that LLM-agnostic metrics often overlook. When GroGU was used to train a query rewriter with Direct Preference Optimization (DPO), the approach yielded gains of up to 18.2 points in Mean Reciprocal Rank (MRR) and 9.4 points in answer accuracy. The study argues that existing relevance scores are inadequate for this purpose and highlights GroGU's potential to optimize RAG systems without manual intervention. This could improve document utility assessment and extend to fields where labeled data are scarce, supporting more scalable and adaptable RAG solutions.
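This summary does not give GroGU's exact formula, so the following is only a minimal sketch under stated assumptions. It treats a document's utility as the drop in a model's entropy when that document is added to the context. The name `entropy_utility` is hypothetical and is not the paper's definition. The sketch also uses made-up probability distributions rather than real LLM outputs. It then computes Mean Reciprocal Rank, the standard ranking metric mentioned above, over the resulting document order.

```python
import math
from typing import Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a discrete distribution, e.g. an LLM's
    probabilities over candidate answers. Lower entropy = higher confidence."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def entropy_utility(probs_without_doc: Sequence[float],
                    probs_with_doc: Sequence[float]) -> float:
    """Hypothetical utility score (an assumption, not GroGU's formula):
    how much adding a document lowers the model's entropy."""
    return entropy(probs_without_doc) - entropy(probs_with_doc)


def mean_reciprocal_rank(rankings: Sequence[Sequence[str]],
                         relevant: Sequence[str]) -> float:
    """Standard MRR with one relevant item per query: the mean of 1/rank of
    that item, where a query contributes 0 if the item is not ranked."""
    total = 0.0
    for ranking, gold in zip(rankings, relevant):
        if gold in ranking:
            total += 1.0 / (list(ranking).index(gold) + 1)
    return total / len(rankings)


# Toy distributions over 4 candidate answers (illustrative numbers only).
baseline = [0.25, 0.25, 0.25, 0.25]        # no document: maximally uncertain
docs = {
    "doc_a": [0.85, 0.05, 0.05, 0.05],     # sharpens the distribution a lot
    "doc_b": [0.30, 0.30, 0.20, 0.20],     # barely changes it
}

# Rank documents by how much they raise the model's confidence.
scores = {name: entropy_utility(baseline, p) for name, p in docs.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)  # ['doc_a', 'doc_b']

# MRR over two toy queries whose relevant document is "doc_a".
mrr = mean_reciprocal_rank([ranking, ["doc_b", "doc_a"]], ["doc_a", "doc_a"])
print(mrr)  # 0.75
```

In a real system the distributions would come from the LLM's token probabilities (for example, averaged per-token entropy over a generated answer). How GroGU aggregates them is not stated in this summary.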
