Unleash the Power of Context with Context Lens 🚀

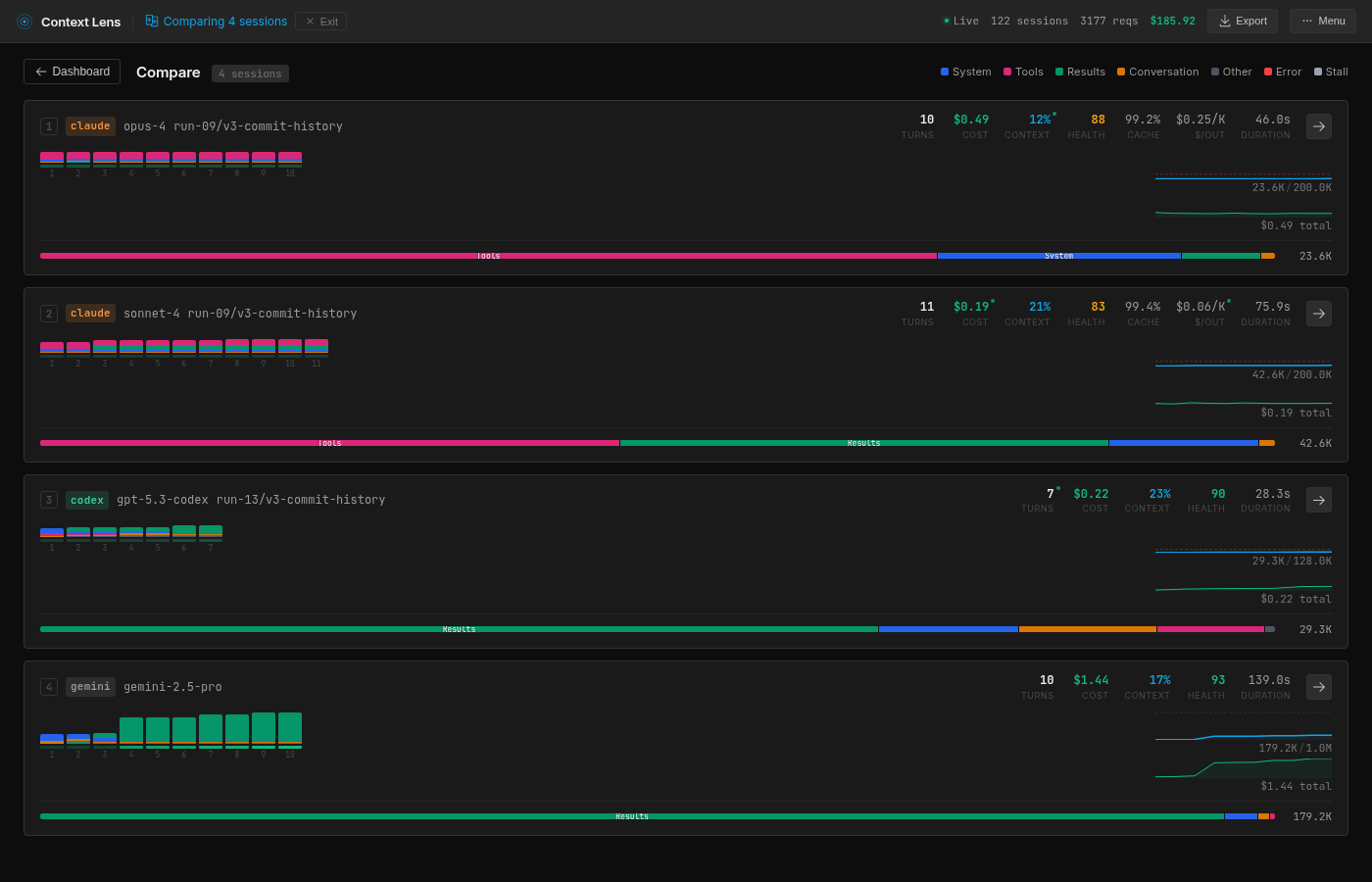

Last week, I compared how Claude (Opus and Sonnet), Codex, and Gemini handle the same task, and the results were surprising! To run the comparison, I built Context Lens, a tool that analyzes how different language models manage their context. The outcome? Each model tackles the same problem in its own way!

Key Insights:

- Token Usage Matters: The context window is measured in tokens, so tokens set how much a model can keep in view at once. Every token you send is also billed, so more tokens mean higher costs.

- Diverse Strategies:

  - Opus uses git history efficiently but pays a high token cost for its tool definitions.

  - Sonnet reads comprehensively and balances file reads against tool results, at the expense of more tokens.

  - Codex excels with low-level commands; it’s precise and quick!

  - Gemini is context-hungry: it pulls in large amounts of data, which leads to variable performance.
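To make the idea of a context breakdown concrete, here is a minimal Python sketch of the kind of accounting involved. It is not Context Lens's code or API: the `Segment` type, the category names, the 4-characters-per-token heuristic, and the price are all assumptions chosen for illustration. Real tokenizers and real pricing differ by model.

```python
from dataclasses import dataclass

# Assumption: roughly 4 characters per token. Real tokenizers vary by model.
CHARS_PER_TOKEN = 4


@dataclass
class Segment:
    """One piece of a request's context (hypothetical structure)."""
    category: str  # e.g. "system", "tool_definitions", "file_reads"
    text: str


def estimate_tokens(text: str) -> int:
    """Estimate tokens from character length, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def breakdown(segments: list[Segment]) -> dict[str, int]:
    """Sum the estimated tokens for each category."""
    totals: dict[str, int] = {}
    for seg in segments:
        totals[seg.category] = totals.get(seg.category, 0) + estimate_tokens(seg.text)
    return totals


def estimated_cost(total_tokens: int, usd_per_million_tokens: float) -> float:
    """Input cost in USD for a given per-million-token price."""
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Made-up request contents, only to exercise the functions.
request = [
    Segment("system", "You are a coding assistant."),
    Segment("tool_definitions", "x" * 4000),
    Segment("file_reads", "y" * 12000),
    Segment("tool_results", "z" * 2000),
]

totals = breakdown(request)
total_tokens = sum(totals.values())
for category, tokens in sorted(totals.items(), key=lambda kv: -kv[1]):
    print(f"{category:18s} {tokens:6d} tokens  {tokens / total_tokens:6.1%}")

# Hypothetical price of 3 USD per million input tokens.
print(f"estimated input cost: ${estimated_cost(total_tokens, 3.0):.6f}")
```

Even with a crude estimate like this, it becomes easy to see which category dominates a request, which is the kind of difference the strategies above point to.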

Why It Matters:

Knowing where each model spends its context helps you pick the right model for a task and trim what you send, which keeps costs down and results more consistent.

🔍 Try Context Lens and explore real-time breakdowns of your LLM API calls! It’s open-source and ready for action.

👉 Share your thoughts or experiences below, and let’s discuss the future of AI!