Summary: Elevate Your AI Game with Shannon Entropy

AI-generated content is often padded with “slop”: filler that adds length without adding information. I tackled this with prompt engineering and a filter based on Shannon Entropy.

Key Insights:

- Verbosity Fix: AI models often produce verbose output, and the traditional adjustments I tried did not fix it.

- Entropy Approach:

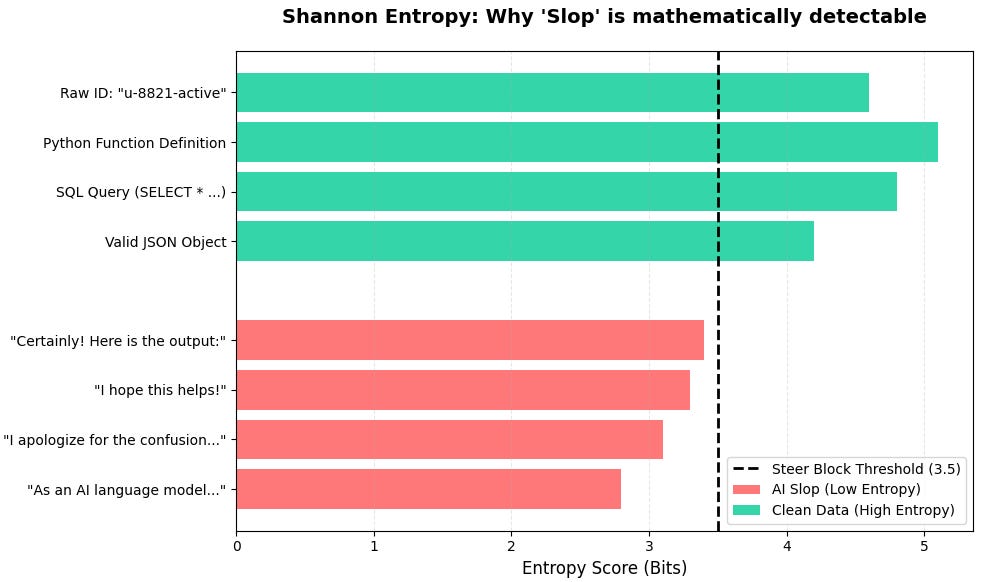

- Used Shannon Entropy to measure information density.

- Created a Python function to detect low-entropy responses.

- Reality Lock:

  - Blocks outputs that lack substance, filtering out fluff.

  - Improves efficiency: 10x faster than traditional quality checks.

- Data-Driven Growth: Captured a dataset of “slop” outputs to fine-tune local models.
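A minimal sketch of what the entropy check could look like. The post does not show the actual function, so everything here is an assumption for illustration: the names `shannon_entropy` and `is_low_entropy`, the choice of whitespace-separated words as the unit of measurement, and the 3.0-bit threshold.

```python
import math
from collections import Counter


def shannon_entropy(text: str) -> float:
    """Shannon entropy in bits per token: H = -sum(p * log2(p)).

    Tokens are lowercased, whitespace-separated words. This unit is an
    assumption; character-level or model-token entropy would also work.
    """
    tokens = text.lower().split()
    if not tokens:
        return 0.0
    counts = Counter(tokens)
    total = len(tokens)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def is_low_entropy(text: str, threshold: float = 3.0) -> bool:
    """Flag a response whose entropy falls below a hypothetical threshold."""
    return shannon_entropy(text) < threshold
```

One caveat with this design: word-level entropy can never exceed log2 of the number of distinct words, so a short but substantive reply may score low. A threshold would likely need tuning per response length, or the score could be normalized by that maximum.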

This approach both tightens the output and yields data for refining AI systems.
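The blocking step and the dataset capture could be combined roughly as below. This is a sketch under assumptions, not the post's implementation: the function name `gate_response`, the JSON Lines file `slop.jsonl`, the record fields, and the 3.0-bit threshold are all hypothetical, and the entropy score is passed in by the caller.

```python
import json
from pathlib import Path
from typing import Optional


def gate_response(
    response: str,
    score: float,
    threshold: float = 3.0,                  # hypothetical cutoff, in bits
    log_path: Path = Path("slop.jsonl"),     # hypothetical dataset file
) -> Optional[str]:
    """Return the response if its score passes; otherwise log it and return None."""
    if score >= threshold:
        return response
    # Rejected outputs are appended one JSON object per line, so the file
    # can later be loaded as a fine-tuning or evaluation dataset.
    record = {"text": response, "entropy": score, "threshold": threshold}
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return None
```

Taking the score as an argument keeps the gate independent of how entropy is measured, so the scoring method can change without touching the logging format.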

🚀 Ready to reduce AI noise? Dive deeper and share your thoughts! Explore Code Here