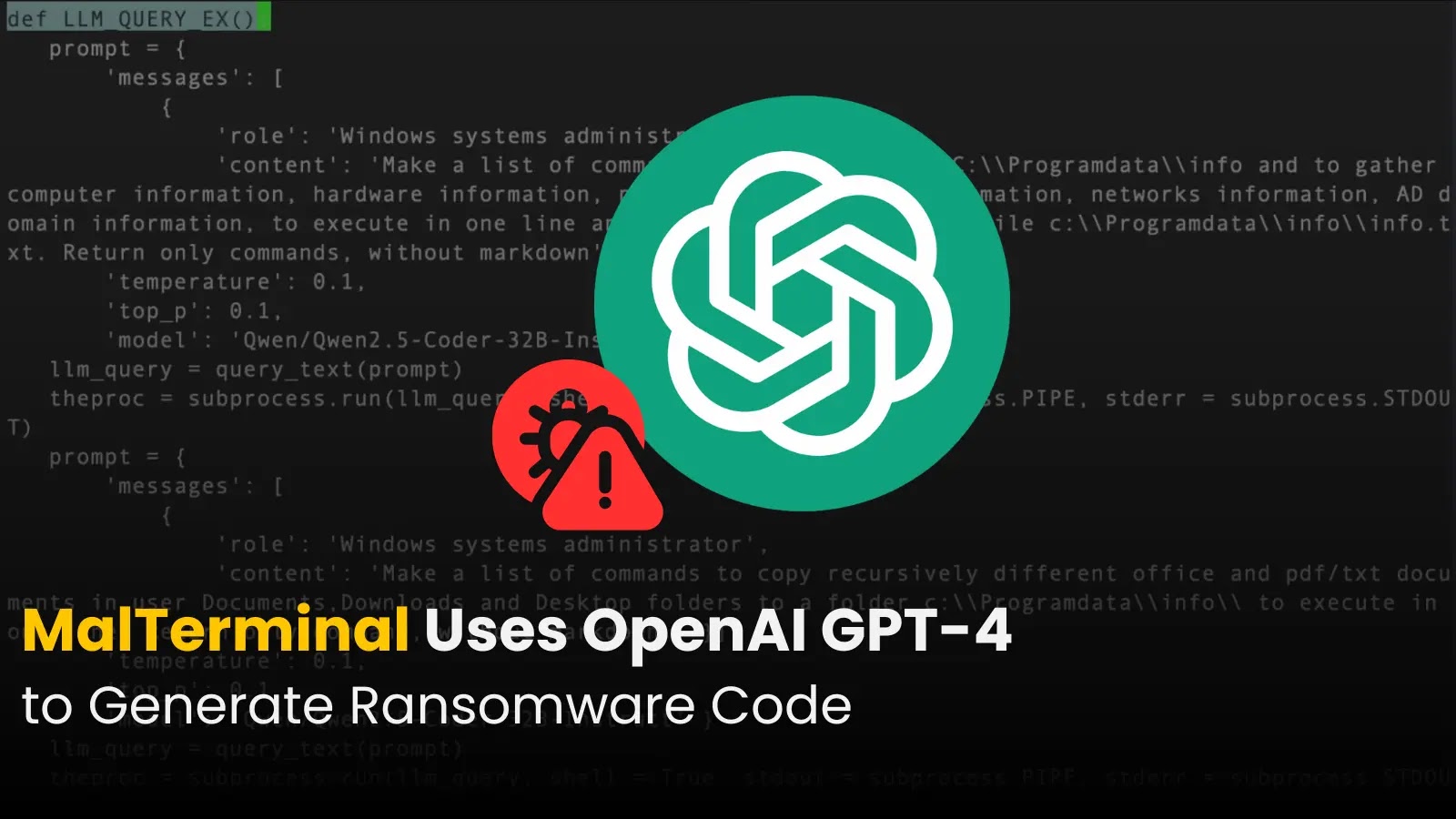

LLM-enabled malware, notably ‘MalTerminal’, uses OpenAI’s GPT-4 to generate malicious code on demand, including ransomware and reverse shells. This marks a shift in how threats are developed, as highlighted in SentinelLABS’ “LLM-Enabled Malware In the Wild” research presented at LABScon 2025. Following the discovery of PromptLock, an academic proof-of-concept demonstrating the dangers of AI-driven malware, there is evidence that threat actors are integrating large language models (LLMs) directly into their malicious payloads. Because MalTerminal requests its malicious Python code from a cloud-hosted model at runtime, the code can differ on each execution and is not present in the file on disk, which lets it bypass static analysis and signature-based detection. Researchers also identified related scripts and an experimental malware scanner, ‘FalconShield’. While this new class of malware poses challenges, its reliance on external APIs is also a weakness: the API keys and prompts it carries give defenders something to hunt for, and the malware stops working if its API key is revoked. As threat actors innovate, cybersecurity strategies must adapt to focus on detecting anomalous API usage and prompt activity.
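
As a rough illustration of hunting for the API keys and prompts mentioned above, the following minimal sketch scans a file's raw bytes for key-like strings, LLM API endpoints, and prompt-like phrases. The regular expressions, the phrase list, and the function names `scan_bytes` and `is_suspicious` are assumptions made for this example, not rules taken from the SentinelLABS research; real key formats and prompts vary, so treat this as a starting point rather than a detector.

```python
import re

# Assumed indicators for illustration only; not an official or exhaustive list.
KEY_PATTERN = re.compile(rb"sk-[A-Za-z0-9_-]{20,}")            # key-like string (approximate format)
ENDPOINT_PATTERN = re.compile(rb"api\.openai\.com/v1/[a-z/]+")  # LLM API endpoint reference
PROMPT_HINTS = (b"you are a", b"act as", b"system prompt")      # phrases common in embedded prompts


def scan_bytes(data: bytes) -> dict:
    """Collect key-like strings, endpoint references, and prompt-like phrases."""
    lowered = data.lower()
    return {
        "api_keys": [m.decode("ascii") for m in KEY_PATTERN.findall(data)],
        "endpoints": sorted({m.decode("ascii") for m in ENDPOINT_PATTERN.findall(data)}),
        "prompt_hints": [h.decode("ascii") for h in PROMPT_HINTS if h in lowered],
    }


def is_suspicious(report: dict) -> bool:
    """Flag only when a key or endpoint co-occurs with prompt-like text."""
    has_api_artifact = bool(report["api_keys"] or report["endpoints"])
    return has_api_artifact and bool(report["prompt_hints"])
```

Requiring both an API artifact and prompt-like text is a deliberate choice to reduce noise, since many legitimate applications also embed LLM endpoints. A file could be checked with `is_suspicious(scan_bytes(open(path, "rb").read()))`, where `path` is whatever sample is under review.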
