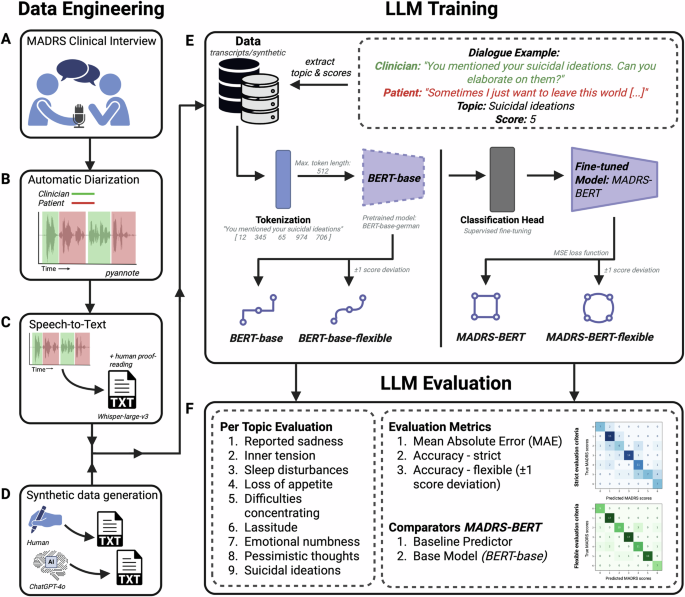

This study investigates MADRS-BERT, a BERT-based language model fine-tuned on German MADRS (Montgomery-Åsberg Depression Rating Scale) interview data to predict depression severity. The model significantly improved prediction accuracy, achieving an average mean absolute error (MAE) between 0.7 and 1.0 and a 75.38% reduction in error relative to baseline predictors. Prior research has focused on total MADRS scores or binary classifications. MADRS-BERT instead predicts a score for each individual item, which is the level of detail clinical assessments rely on. Because the fine-tuning is explicitly structured around the MADRS items, the model's outputs stay aligned with an established psychological framework and remain clinically interpretable.

The study acknowledges limitations, including how the model handles scoring discrepancies and possible bias in the training data. It nonetheless emphasizes the model's scalability and interpretability for real-world mental health applications. By embedding AI within structured clinical frameworks such as MADRS, the work addresses the need for transparent, reliable tools in psychiatry. Such tools can support diagnostic assessment while respecting ethical concerns around data use and patient privacy. Overall, the approach illustrates the potential of large language models (LLMs) in clinical settings.
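The two headline metrics, item-level MAE and percentage error reduction, can be made concrete with a small sketch. It assumes that "error reduction" means the relative drop in MAE from a baseline to the model. The summary does not define it, so treat that as an assumption. The helper names, the example ratings, and the constant-value baseline are hypothetical illustrations, not the study's data or baselines. Only the MADRS item range of 0 to 6 is taken from the instrument itself.

```python
from statistics import mean

# Each MADRS item is rated on a 0-6 scale (10 items, total 0-60).
MADRS_ITEM_MIN, MADRS_ITEM_MAX = 0, 6


def item_mae(y_true, y_pred):
    """Mean absolute error between clinician ratings and predictions for one item."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need equal-length, non-empty score lists")
    for score in list(y_true) + list(y_pred):
        if not MADRS_ITEM_MIN <= score <= MADRS_ITEM_MAX:
            raise ValueError(f"score {score} outside MADRS item range 0-6")
    return mean(abs(t - p) for t, p in zip(y_true, y_pred))


def relative_error_reduction(mae_model, mae_baseline):
    """Percentage by which the model's MAE undercuts the baseline MAE.

    Assumed definition: 100 * (MAE_baseline - MAE_model) / MAE_baseline.
    """
    if mae_baseline <= 0:
        raise ValueError("baseline MAE must be positive")
    return 100.0 * (mae_baseline - mae_model) / mae_baseline


if __name__ == "__main__":
    # Hypothetical ratings for a single item across five interviews.
    clinician = [2, 4, 0, 3, 5]
    model = [2, 3, 1, 3, 4]
    baseline = [3] * 5  # hypothetical baseline: always predict 3

    mae_m = item_mae(clinician, model)
    mae_b = item_mae(clinician, baseline)
    print(f"model MAE={mae_m:.2f}, baseline MAE={mae_b:.2f}, "
          f"reduction={relative_error_reduction(mae_m, mae_b):.1f}%")
```

With these made-up numbers the model's MAE is 0.6 and the baseline's is 1.4, which is a reduction of roughly 57%. Computing the metric per item, rather than on the total score, mirrors the item-level reporting described above.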
