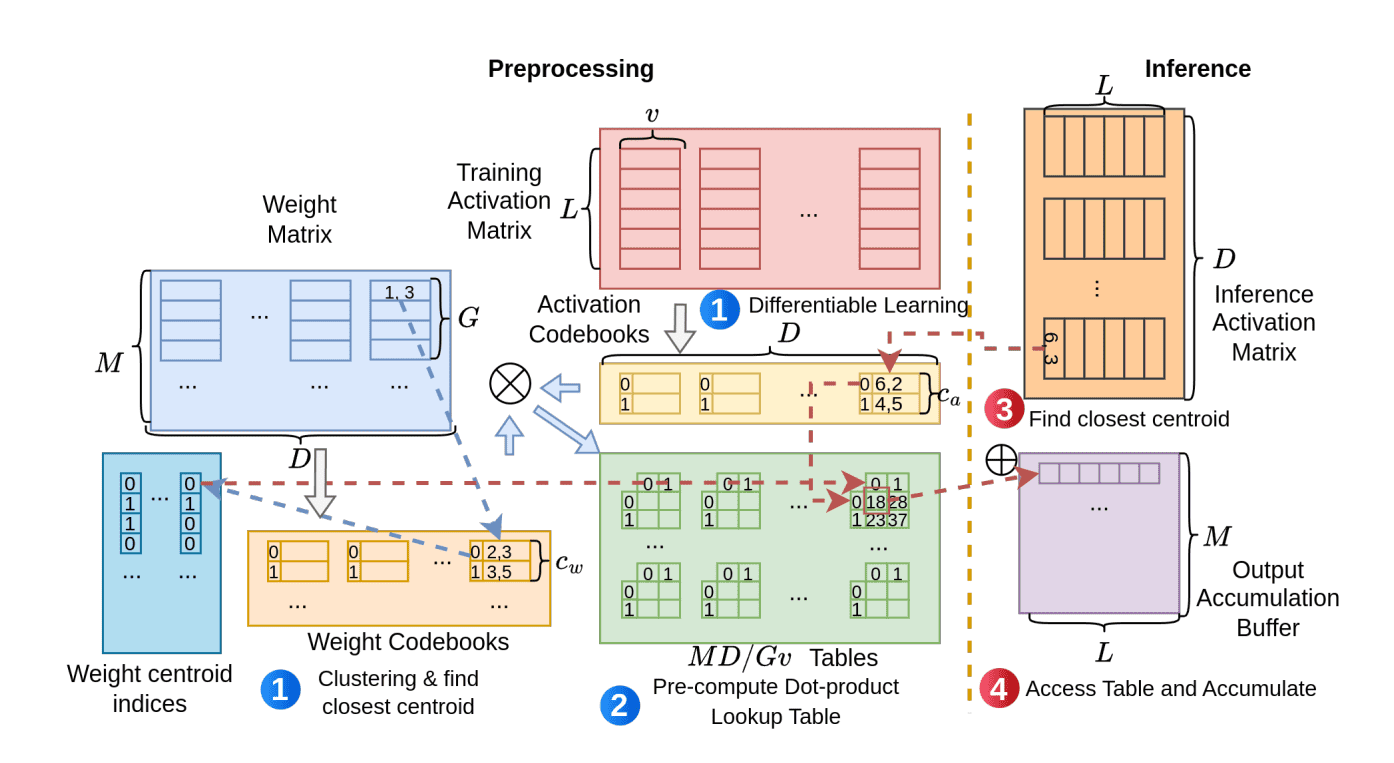

Large language models (LLMs) are powerful but hard to run efficiently on everyday devices. Researchers including Zifan He and Yang Wang from Microsoft Research introduced LUT-LLM, described as the first FPGA accelerator to efficiently run LLMs with more than one billion parameters. The design shifts computation from arithmetic to memory access: instead of multiplying activations by weights, it retrieves precomputed results through table lookups, which improves both performance and energy efficiency. Implemented on a V80 FPGA with a customized Qwen-3 1.7B model, LUT-LLM achieved a 1.66x speedup and a 4.1x gain in energy efficiency over GPUs such as the AMD MI210 and NVIDIA A100. Its key techniques are activation-weight co-quantization, a bandwidth-aware parallel centroid search, efficient 2D table lookups, and a hybrid execution strategy, which together aim to maximize throughput while minimizing latency. The work also shows that the approach scales to larger models, making it a substantial step toward practical on-device AI. Quantization and hardware acceleration remain active research directions for model deployment.
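
To make the lookup idea concrete, here is a minimal Python sketch of a matrix-vector product computed from a 2D table indexed by quantized activations and quantized weights. It is an illustration under stated assumptions, not the LUT-LLM implementation: the function names, the tiny codebooks, and the sub-vector length `d` are all hypothetical, and the bandwidth-aware parallel search and hybrid execution strategy are not modeled.

```python
from typing import List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def nearest_centroid(sub: Sequence[float],
                     codebook: Sequence[Sequence[float]]) -> int:
    """Centroid search: index of the codebook entry closest to `sub`
    (squared Euclidean distance)."""
    def dist(c: Sequence[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(sub, c))
    return min(range(len(codebook)), key=lambda i: dist(codebook[i]))


def build_table(act_codebook, weight_codebook) -> List[List[float]]:
    """Precompute table[a][w] = <activation centroid a, weight centroid w>."""
    return [[dot(a, w) for w in weight_codebook] for a in act_codebook]


def lut_matvec(x, act_codebook, weight_indices, table, d) -> List[float]:
    """Matrix-vector product using only centroid search and table lookups."""
    groups = [x[i:i + d] for i in range(0, len(x), d)]
    act_idx = [nearest_centroid(g, act_codebook) for g in groups]
    # Each output is a sum of looked-up partial dot products, one per group.
    return [sum(table[a][w] for a, w in zip(act_idx, row))
            for row in weight_indices]


def dense_matvec(x, weight_codebook, weight_indices) -> List[float]:
    """Reference: expand the quantized weights and multiply as usual."""
    return [dot(x, [v for w in row for v in weight_codebook[w]])
            for row in weight_indices]


# Hypothetical toy data: sub-vectors of length 2, 4 activation centroids,
# 3 weight centroids, and a 3x4 weight matrix stored as centroid indices.
d = 2
act_codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weight_codebook = [[0.5, -0.5], [1.0, 2.0], [-1.0, 0.0]]
weight_indices = [[0, 1], [2, 2], [1, 0]]

table = build_table(act_codebook, weight_codebook)
x = [1.0, 0.0, 1.0, 1.0]  # already lies on activation centroids
y = lut_matvec(x, act_codebook, weight_indices, table, d)
```

When the input lies exactly on activation centroids, as `x` does here, the lookup result matches the dense product over the dequantized weights. For other inputs the result is an approximation whose error depends on how well the activation codebook covers the data.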
