Inference is reshaping AI infrastructure. Agentic systems capable of multi-step reasoning and long-term memory now tackle complex tasks in software development, video generation, and deep research. These workloads place growing demands on compute, memory, and networking, which calls for rethinking how inference infrastructure is built. NVIDIA’s SMART framework addresses these challenges by optimizing inference through a disaggregated infrastructure that improves resource efficiency.

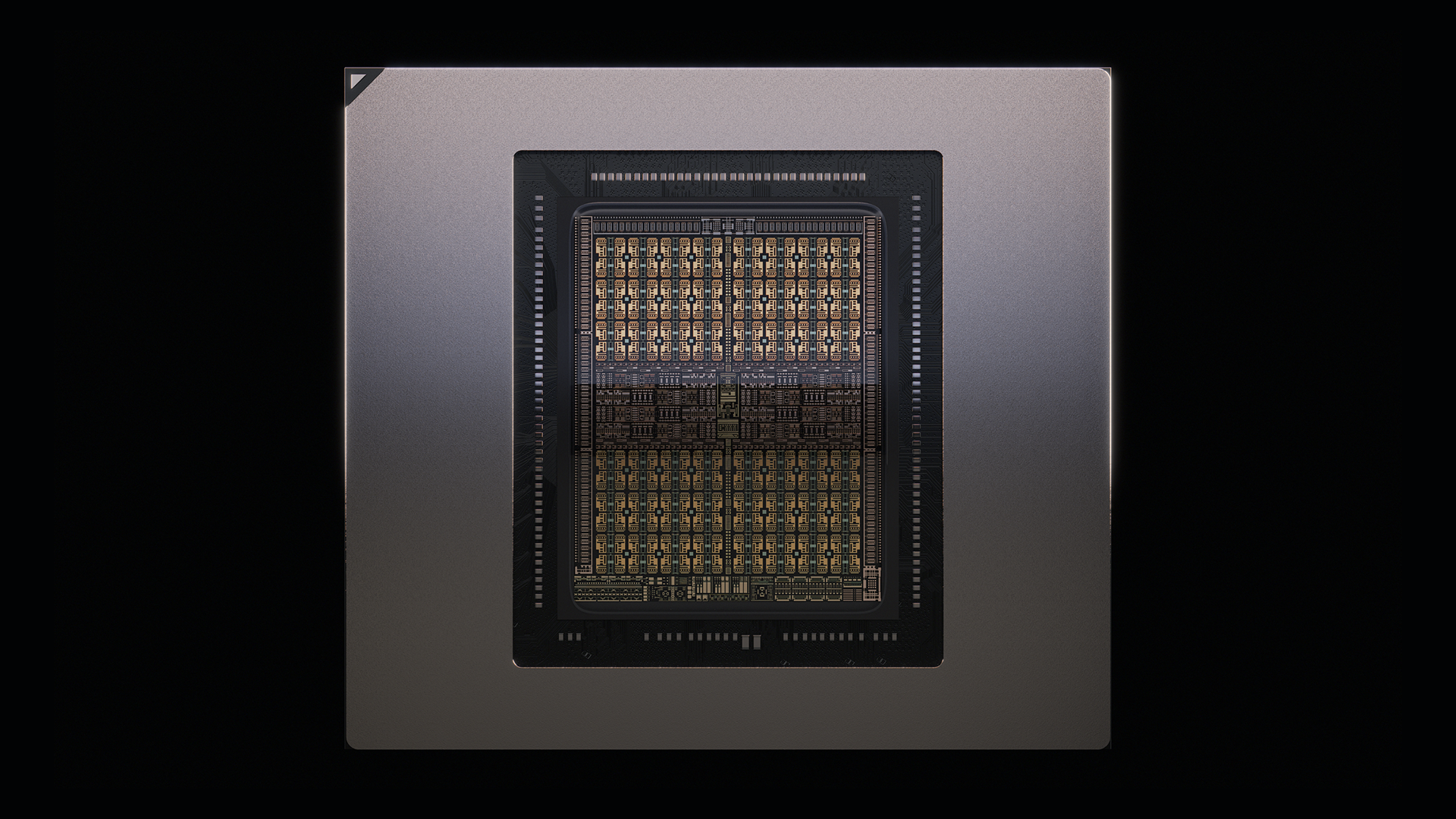

The NVIDIA Rubin CPX GPU targets long-context workloads, offering 30 petaFLOPs of NVFP4 compute and 128 GB of GDDR7 memory for high-value applications such as coding and HD video production. Together with the Vera Rubin NVL144 CPX rack, it is positioned to deliver a return of up to 50x, which corresponds to as much as $5B in revenue from a $100M CAPEX investment.
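The return figure quoted above is simply revenue divided by capital expenditure. The short sketch below reproduces that arithmetic. The function name `revenue_multiple` is hypothetical and used only for illustration, and the calculation assumes the quoted multiple is revenue over CAPEX rather than net profit over CAPEX.

```python
def revenue_multiple(revenue_usd: float, capex_usd: float) -> float:
    """Return revenue as a multiple of capital expenditure.

    Assumption: the quoted "50x" is revenue / CAPEX, not net profit / CAPEX.
    """
    if capex_usd <= 0:
        raise ValueError("capex_usd must be positive")
    return revenue_usd / capex_usd


# Figures quoted in the text: up to $5B revenue from a $100M CAPEX investment.
capex_usd = 100_000_000
revenue_usd = 5_000_000_000

multiple = revenue_multiple(revenue_usd, capex_usd)
print(f"Revenue multiple: {multiple:.0f}x")
```

Both dollar amounts are upper-bound estimates from the source, so the resulting multiple is a ceiling rather than an expected value.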

Orchestrated by NVIDIA Dynamo, the Rubin CPX GPU sets a new benchmark for AI infrastructure and expands what is possible for generative AI applications and complex workloads.
