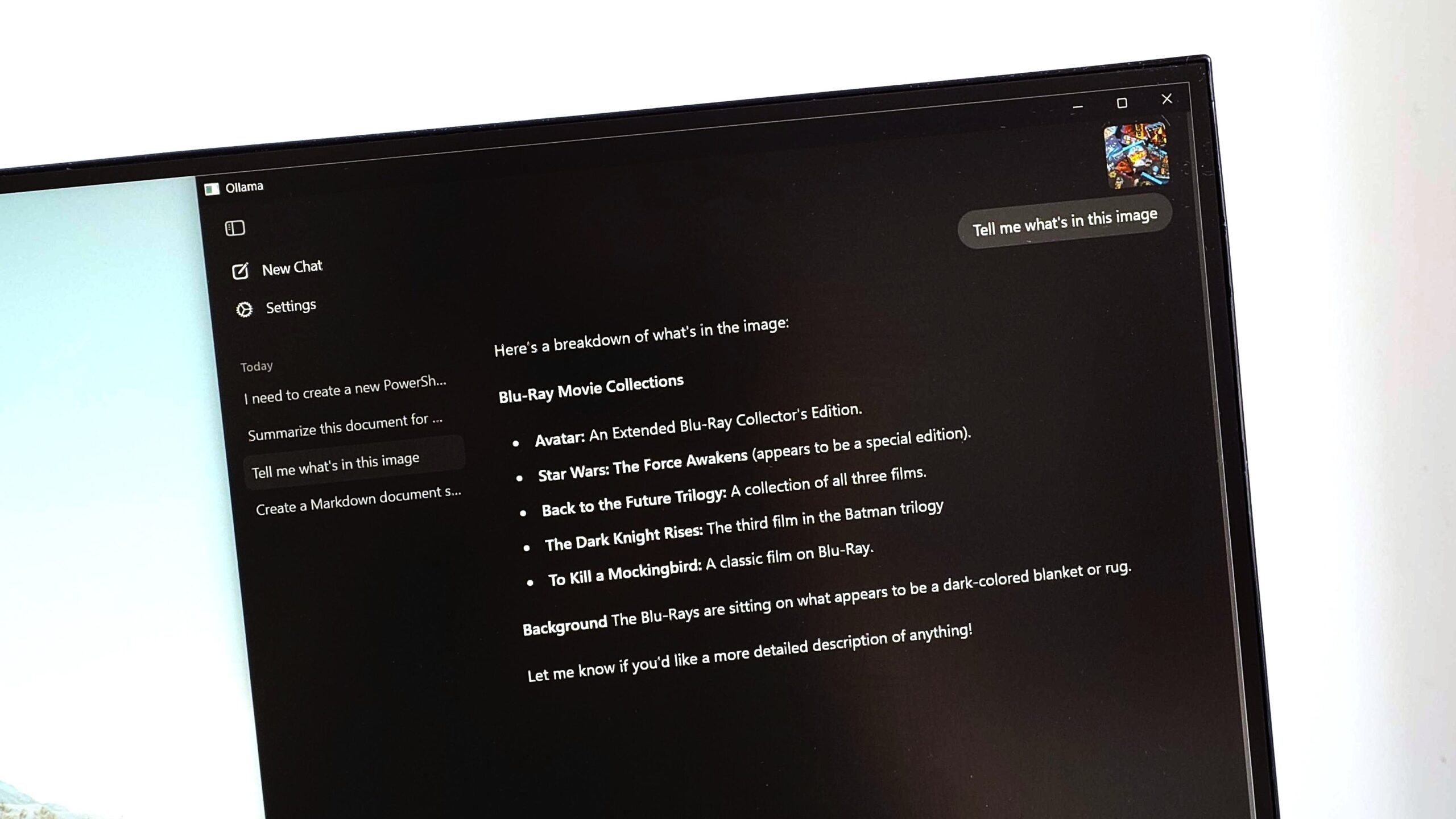

Ollama, a tool for running AI large language models (LLMs) locally, now has an official GUI app for Windows. Previously, users had to work with Ollama through a terminal; now they can install the app and choose a model from a dropdown menu. A CLI-only version remains available on GitHub. The app's interface resembles that of a typical AI chatbot, supports drag-and-drop for files and images, and exposes settings such as context length. This makes Ollama more approachable for people unfamiliar with terminal commands, although some advanced features still require the CLI. For good performance, a robust CPU and ample RAM are recommended. Because the models run locally, Ollama works offline without relying on cloud services, which makes it a valuable tool for AI enthusiasts.
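As a rough illustration of what the context length setting controls, the sketch below sends a prompt to a locally running Ollama instance from Python and sets the context window per request. It assumes Ollama's local HTTP API is listening on its default address (`http://localhost:11434`) and that a model has already been downloaded; the model name `llama3.2` and the helper names `build_payload` and `generate` are placeholders for illustration, not part of the app.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(model, prompt, context_length=4096):
    """Build the JSON body for a single, non-streaming generation request."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one complete reply instead of chunks
        "options": {"num_ctx": context_length},  # context window, in tokens
    }


def generate(model, prompt, context_length=4096, url=OLLAMA_URL):
    """Send the prompt to the local server and return the reply text."""
    body = json.dumps(build_payload(model, prompt, context_length)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    # Supplying a request body makes urllib issue a POST.
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]


if __name__ == "__main__":
    # "llama3.2" is a placeholder; substitute any model installed locally.
    print(generate("llama3.2", "Why is the sky blue?", context_length=8192))
```

A larger context length lets the model consider more of the conversation or attached files at once, at the cost of more memory, which is one reason ample RAM is recommended.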
