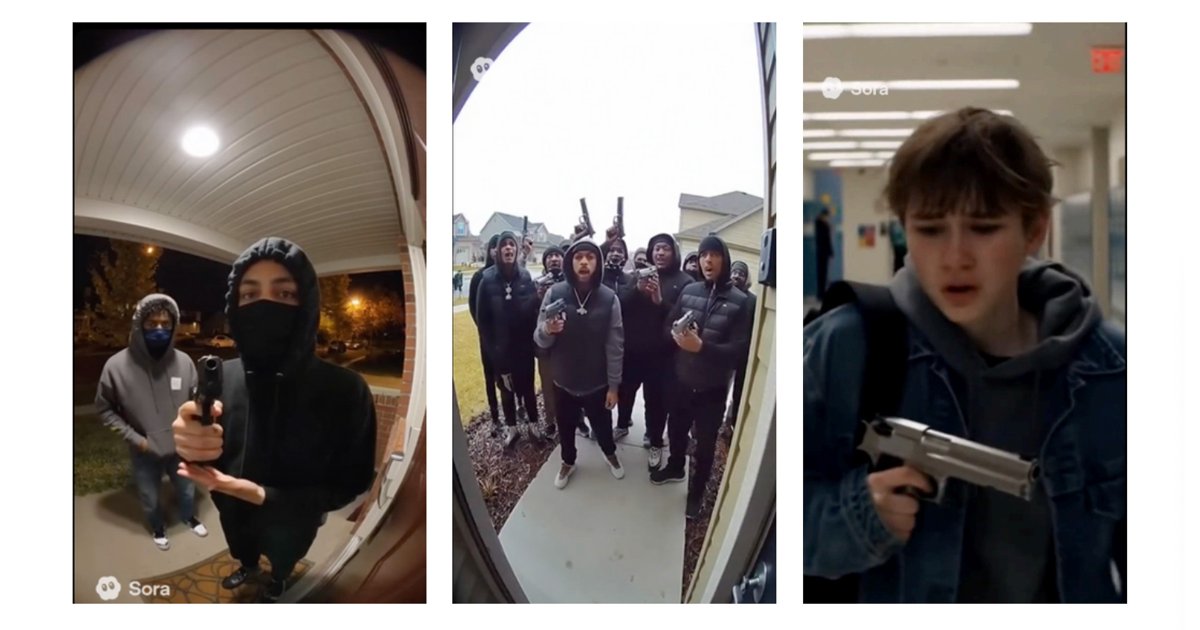

OpenAI’s Sora 2 is under scrutiny after a report by the consumer watchdog group Ekō found that teenagers can easily use it to generate disturbing content, including videos of school shootings and drug use. Despite CEO Sam Altman’s assurances about safety measures, researchers showed that underage users were able to create harmful videos featuring violence, substance abuse, and self-harm. The report suggests that the rush for profit may come at the expense of youth safety, with teens unwittingly becoming subjects of an uncontrolled AI experiment.

The risks are underscored by mental health harms already linked to AI interactions, including suicides that have been attributed to OpenAI’s products. Because there is no comprehensive data on Sora 2’s impact on its users, concerns are growing about how AI-generated content affects the mental health of minors. The absence of government regulation compounds the problem, prompting calls for stronger oversight as society grapples with the implications of these technologies.