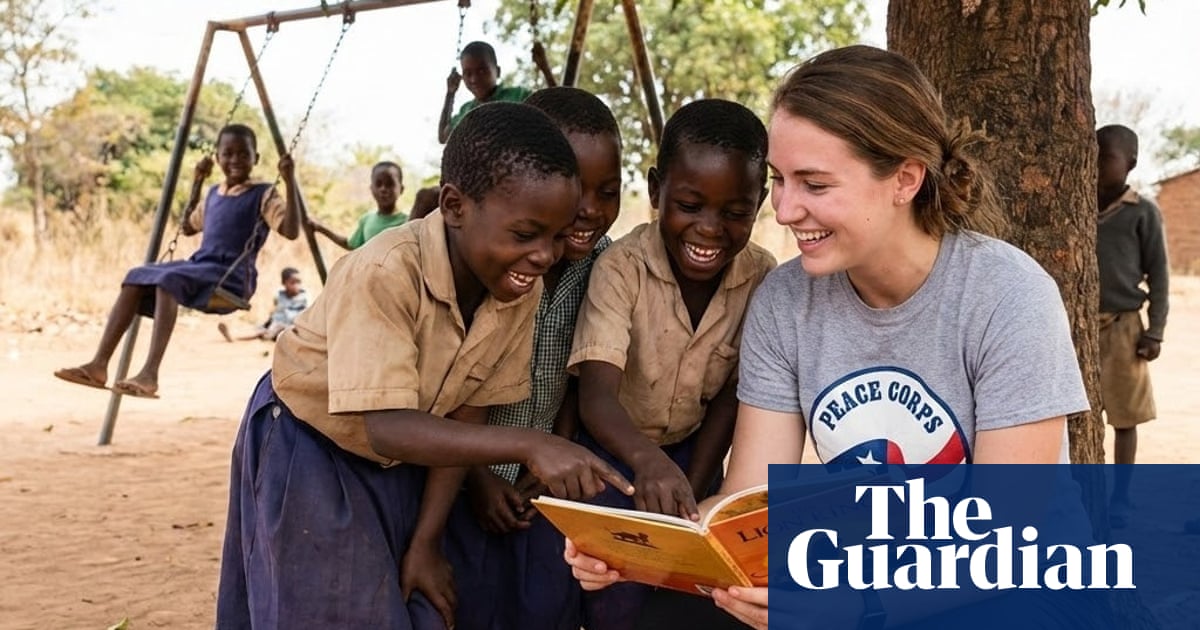

Google’s AI image generator, Nano Banana Pro, is facing scrutiny for producing images that perpetuate “white savior” narratives and racial biases in humanitarian contexts, particularly those involving Africa. For prompts such as “volunteer helps children in Africa,” almost every result showed a white woman surrounded by Black children, often alongside the logos of well-known charities such as World Vision and Save the Children. Researchers, including Arsenii Alenichev of the Institute of Tropical Medicine, drew attention to the problem, pointing to both the unprompted inclusion of charity logos and the reinforcement of stereotypes that link dark skin with poverty.

Critics describe these images as outdated and harmful tropes. The unauthorized use of charity logos has also raised questions about whether such images could be mistaken for genuine material from those organizations. Across the NGO sector, there is growing alarm over what some call “poverty porn 2.0,” as AI tools reproduce and amplify existing social biases. Google has acknowledged that its AI safeguards require continual refinement.
