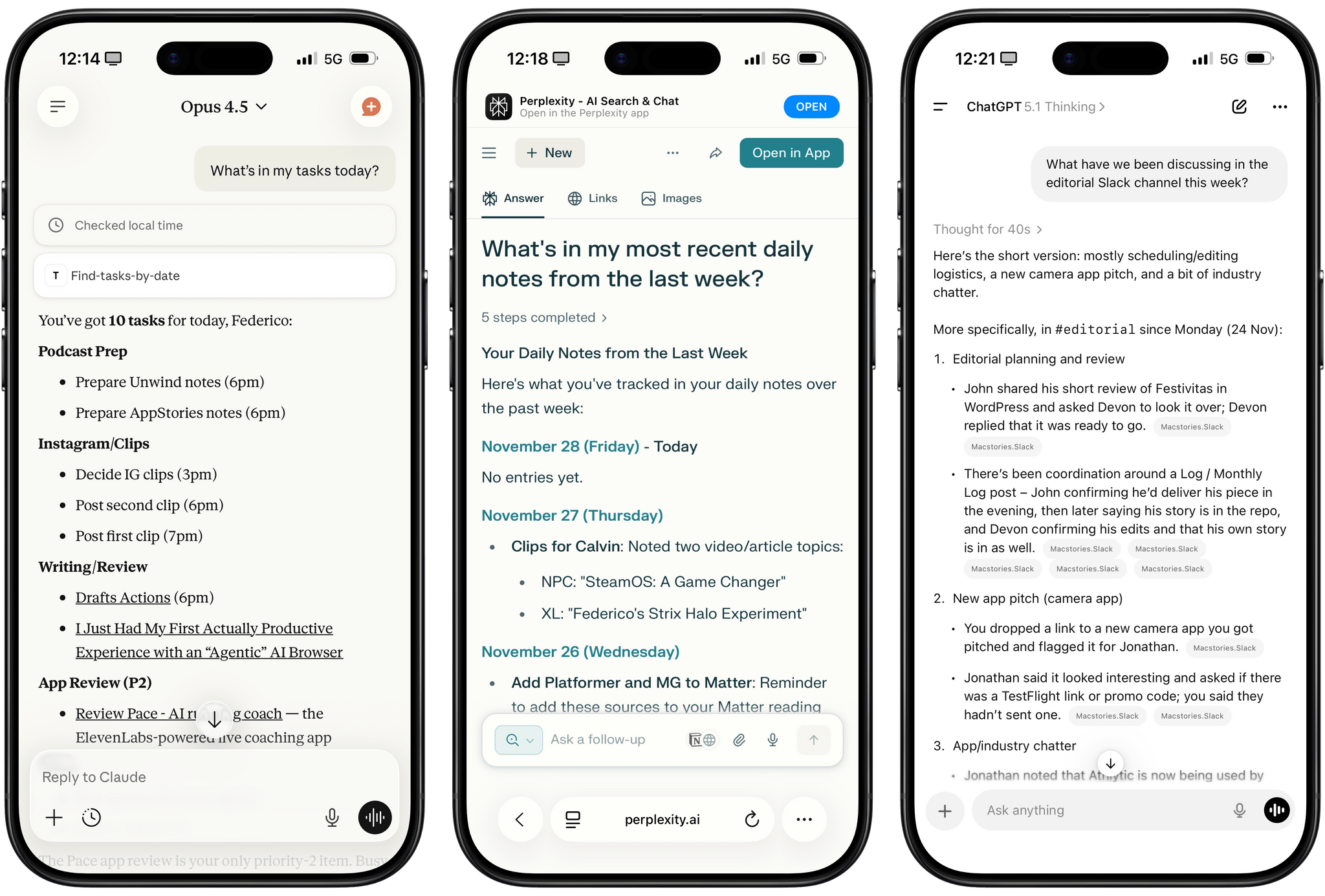

Following the release of Claude Opus 4.5, Simon Willison observed that it is becoming harder to tell how much a new AI model actually improves on its predecessor. Many users struggle to identify meaningful differences between iterations, particularly as companies emphasize benchmark scores. Willison suggests building a personal test suite that evaluates models against your own workflows, while Ethan Mollick recommends applying a consistent approach each time to reassess AI progress. The success of applications like ChatGPT and Google’s Gemini, whose versatile interfaces resonate with users, underscores the importance of user experience in AI. Sebastiaan de With notes that innovative interfaces become crucial as the underlying models grow more standardized. Ultimately, choosing between advanced LLMs depends on factors like cost, app ecosystem, and design rather than performance metrics alone. Exploring the practical differences between AI applications, beyond surface-level improvements, is the best way to inform personal preferences and improve workflow efficiency.
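To make the idea of a personal test suite concrete, here is a minimal sketch in Python. It is not Willison's or Mollick's actual method. The `Case` structure, the `run_suite` and `pass_rate` helpers, and the two stub models are all hypothetical stand-ins. In practice each stub would be replaced by a wrapper around a real model API, and the checks would reflect your own workflow.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Assumption: a "model" is any function from prompt text to response text.
# Real API clients would need a thin wrapper to fit this shape.
Model = Callable[[str], str]


@dataclass
class Case:
    name: str
    prompt: str
    check: Callable[[str], bool]  # True if the response is acceptable to you


def run_suite(models: Dict[str, Model], cases: List[Case]) -> Dict[str, Dict[str, bool]]:
    """Run every case against every model and record pass/fail per case."""
    results: Dict[str, Dict[str, bool]] = {}
    for model_name, model in models.items():
        results[model_name] = {c.name: c.check(model(c.prompt)) for c in cases}
    return results


def pass_rate(case_results: Dict[str, bool]) -> float:
    """Fraction of cases that passed (0.0 for an empty suite)."""
    if not case_results:
        return 0.0
    return sum(case_results.values()) / len(case_results)


# Hypothetical stand-ins for an older and a newer model.
def stub_old(prompt: str) -> str:
    return "I cannot help with that."


def stub_new(prompt: str) -> str:
    if "SQL" in prompt:
        return "SELECT name FROM users WHERE active = 1;"
    return "The release fixes two bugs."


# Illustrative cases; replace these with tasks from your own workflow.
CASES = [
    Case("sql_query", "Write a SQL query listing active users.",
         lambda r: "select" in r.lower()),
    Case("short_summary", "Summarize this changelog in one sentence.",
         lambda r: 0 < len(r.split()) <= 30),
]

if __name__ == "__main__":
    results = run_suite({"stub_old": stub_old, "stub_new": stub_new}, CASES)
    for name, case_results in results.items():
        print(name, case_results, f"pass rate: {pass_rate(case_results):.0%}")
```

Rerunning the same cases each time a new model ships gives a like-for-like comparison on the tasks you care about, rather than on vendor benchmarks.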
