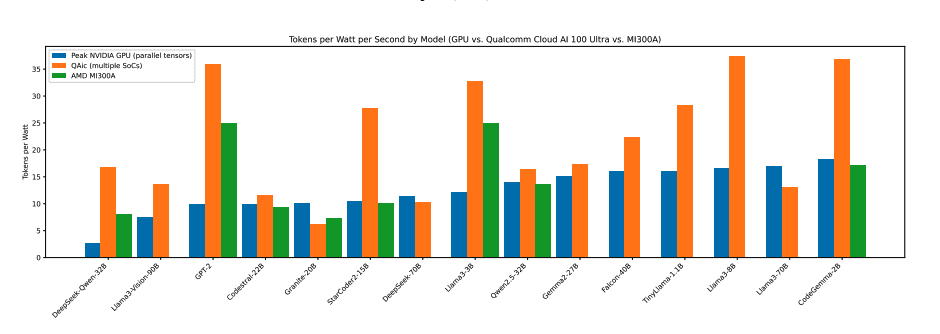

The Qualcomm Cloud AI 100 Ultra (QAic) accelerator is reported to deliver better energy efficiency and performance than leading NVIDIA and AMD accelerators (NVIDIA A100 and H200, AMD MI300A) when serving 15 open-source large language models (LLMs) ranging from 117 million to 90 billion parameters. The study, conducted by researchers from the University of California, San Diego, evaluated QAic in high-performance computing (HPC) environments using the vLLM framework. According to the results, QAic achieved higher throughput than the competing accelerators, reaching 11.2 tokens per second on Llama-2-70B. The highest throughput, 62.3 tokens per second, was recorded with Mistral-7B. QAic’s energy efficiency is also notable, given the rising energy demand of AI workloads. Future research should test scalability with larger models and more diverse tasks, and include detailed latency measurements, to establish how well QAic holds up in demanding AI environments.
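To make the two metrics concrete, here is a minimal Python sketch of how throughput (tokens per second) and energy efficiency (tokens per joule) relate. The function names are hypothetical, and the power figure in the usage lines is a placeholder chosen for illustration, not a measurement from the study.

```python
def tokens_per_second(num_tokens: int, elapsed_s: float) -> float:
    """Throughput: generated tokens divided by wall-clock time in seconds."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    return num_tokens / elapsed_s


def tokens_per_joule(throughput_tps: float, avg_power_w: float) -> float:
    """Energy efficiency: throughput divided by average power draw.

    One watt is one joule per second, so (tokens/s) / (J/s) = tokens/J.
    """
    if avg_power_w <= 0:
        raise ValueError("avg_power_w must be positive")
    return throughput_tps / avg_power_w


# Illustrative usage. 11.2 tokens/s is the Llama-2-70B figure quoted above;
# the 100 W average power is an ASSUMED placeholder, not a reported value.
throughput = tokens_per_second(num_tokens=1120, elapsed_s=100.0)
efficiency = tokens_per_joule(throughput, avg_power_w=100.0)
print(f"{throughput:.1f} tokens/s, {efficiency:.3f} tokens/J")
```

Under this definition, an accelerator with lower raw throughput can still come out ahead on efficiency if its power draw is proportionally lower.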
