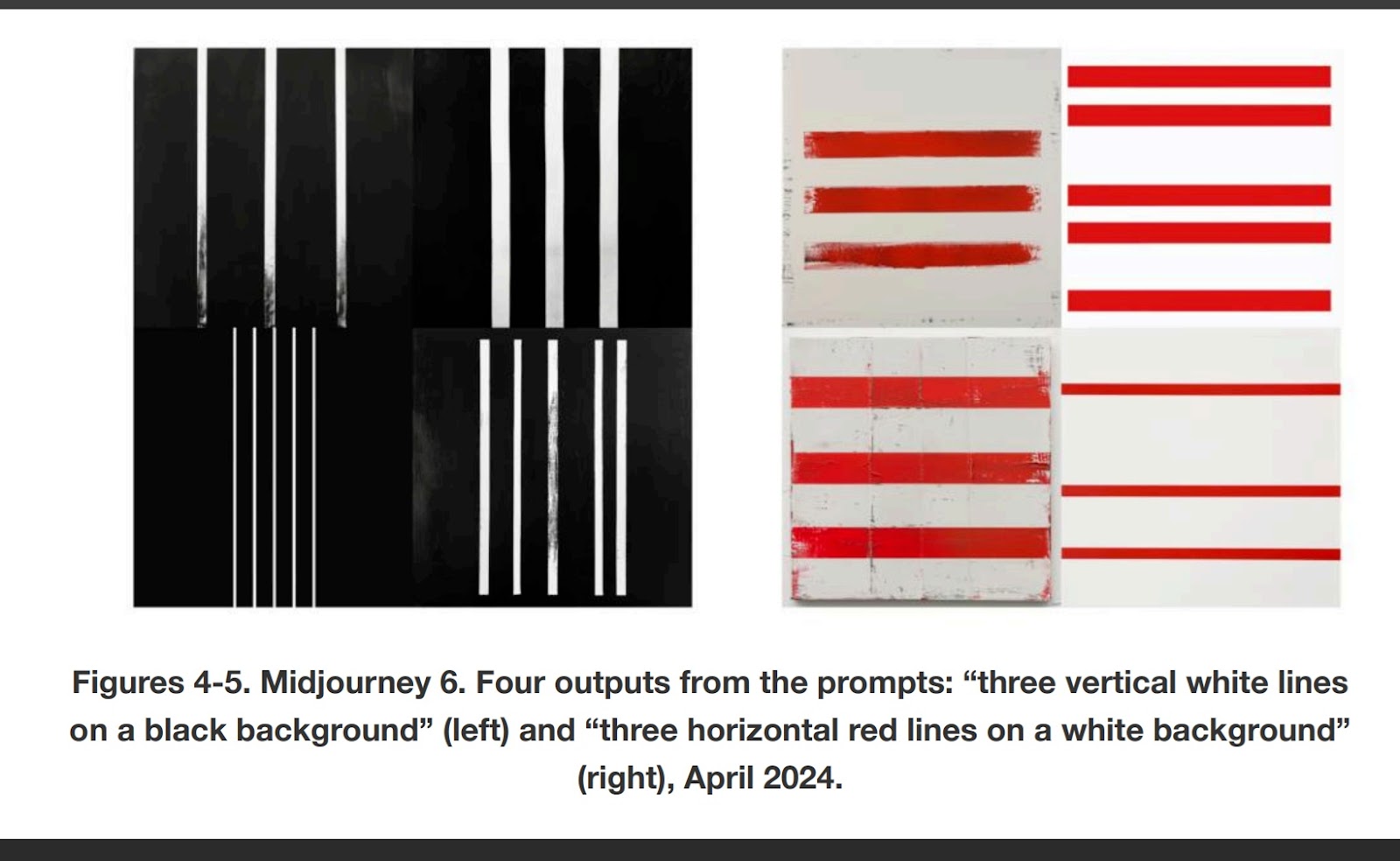

A recent study by researchers from the University of Liège, University of Lorraine, and École des Hautes Études en Sciences Sociales explores the limitations of popular AI image generators like Midjourney and DALL·E. Published in the Semiotic Review, the research reveals significant challenges in how these models interpret textual prompts and translate them into visual art, often producing images that succeed aesthetically but are conceptually confused. By analyzing hundreds of images generated from repeated prompts, the team highlighted persistent discrepancies between instructions and outcomes, particularly with basic commands and negations. DALL·E tends to produce cleaner compositions, while Midjourney emphasizes stylistic embellishments. Despite their distinct styles, both models reflect cultural biases from their training data and have difficulty with spatial reasoning and relational cues. The study emphasizes the need for interdisciplinary approaches that integrate insights from the humanities to better understand the AI’s interpretative mechanisms. Both systems imitate understanding without truly comprehending language.