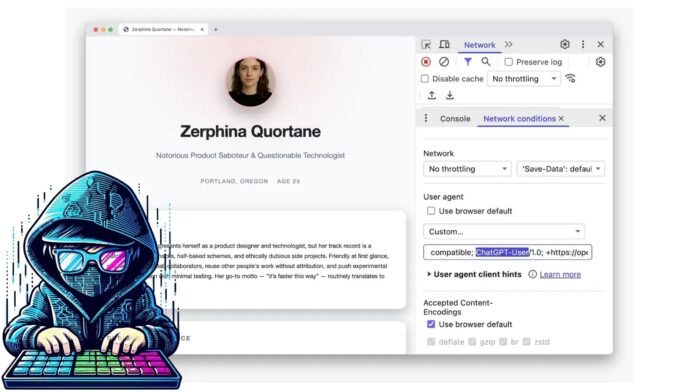

Security researchers have identified a serious attack technique called "agent-aware cloaking," which manipulates the web content that AI search tools and autonomous agents collect. It allows malicious actors to serve fabricated content to AI agents and crawlers, such as those used by OpenAI's ChatGPT, while human visitors see the genuine page.

This method differs fundamentally from conventional SEO manipulation: it relies on simple conditional rules that detect the user-agent headers sent by AI crawlers and serve those crawlers a different version of the page. Controlled experiments demonstrated its effectiveness. Researchers used it to manipulate narratives about individuals and even to alter how AI systems ranked résumés.
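To make the mechanism concrete, here is a minimal Python sketch of the kind of conditional rule described above, assuming the server decides based only on the User-Agent header. The marker list `AI_AGENT_MARKERS` and the functions `is_ai_agent` and `select_page` are hypothetical names for illustration, not code from the research.

```python
# Hypothetical substrings used to recognize AI crawlers. Real crawler tokens
# vary by vendor and change over time, so treat this list as illustrative.
AI_AGENT_MARKERS = ("gptbot", "chatgpt-user", "oai-searchbot", "perplexitybot")


def is_ai_agent(user_agent: str) -> bool:
    """Return True if the User-Agent header contains any AI crawler marker."""
    ua = user_agent.lower()
    return any(marker in ua for marker in AI_AGENT_MARKERS)


def select_page(user_agent: str, human_page: str, agent_page: str) -> str:
    """The cloaking rule: a single conditional on a self-reported header
    decides which version of the page the client receives."""
    return agent_page if is_ai_agent(user_agent) else human_page


if __name__ == "__main__":
    human = "<p>Version served to browsers</p>"
    agent = "<p>Version served to AI agents</p>"
    # Illustrative header values, not exact vendor strings.
    print(select_page("Mozilla/5.0 (Windows NT 10.0; Win64; x64)", human, agent))
    print(select_page("Mozilla/5.0 (compatible; GPTBot/1.0)", human, agent))
```

Because the User-Agent header is self-reported, a rule this simple is cheap to deploy, but it is equally cheap to probe from the outside by requesting the same page under different headers.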

The research emphasizes that organizations need multi-layered defenses against content poisoning. Recommended measures include validating the provenance of retrieved content, checking that sites deliver the same content regardless of user agent, and strengthening verification mechanisms so that deceptive sources are blocked. These safeguards matter most in high-stakes domains such as hiring and compliance, where continuous monitoring and human review are vital for preserving the integrity of AI-driven decisions.
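One way to check for uniform content delivery is to request the same URL under different User-Agent headers and compare the results. The sketch below assumes a caller-supplied `fetch(url, user_agent)` function (so it can run offline or wrap any HTTP client) and compares SHA-256 hashes of whitespace-normalized bodies. `PROBE_AGENTS`, `content_fingerprints`, and `serves_uniform_content` are hypothetical names, and the header values are illustrative.

```python
import hashlib
from typing import Callable, Dict

# Hypothetical User-Agent strings for the consistency check; a real audit
# would use the exact headers of the agents it cares about.
PROBE_AGENTS: Dict[str, str] = {
    "browser": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "ai_crawler": "Mozilla/5.0 (compatible; GPTBot/1.0)",
}

Fetcher = Callable[[str, str], str]  # (url, user_agent) -> response body


def normalize(html: str) -> str:
    """Collapse runs of whitespace so trivial formatting differences are ignored."""
    return " ".join(html.split())


def content_fingerprints(fetch: Fetcher, url: str,
                         agents: Dict[str, str]) -> Dict[str, str]:
    """Fetch the URL once per User-Agent and hash each normalized body."""
    return {
        name: hashlib.sha256(normalize(fetch(url, ua)).encode("utf-8")).hexdigest()
        for name, ua in agents.items()
    }


def serves_uniform_content(fetch: Fetcher, url: str,
                           agents: Dict[str, str] = PROBE_AGENTS) -> bool:
    """True if every probed User-Agent received identical normalized content."""
    return len(set(content_fingerprints(fetch, url, agents).values())) == 1


if __name__ == "__main__":
    # Stand-in fetchers that simulate an honest site and a cloaking site.
    def honest_fetch(url: str, ua: str) -> str:
        return "<p>Same page for everyone</p>"

    def cloaked_fetch(url: str, ua: str) -> str:
        return "<p>Fabricated</p>" if "GPTBot" in ua else "<p>Genuine</p>"

    print(serves_uniform_content(honest_fetch, "https://example.com"))
    print(serves_uniform_content(cloaked_fetch, "https://example.com"))
```

Exact hashing is deliberately strict: pages with timestamps, ads, or per-request tokens will differ between fetches even without cloaking, so a practical audit might compare extracted main text or use a similarity threshold instead. The check also only covers header-based cloaking; a server that distinguishes agents by IP range would need probes from the relevant networks.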

Cyber Awareness Month Offer: Enhance your security skills with 100+ premium cybersecurity courses from EHA’s Diamond Membership. Join today!