Unlocking AI Efficiency: Introducing BitNet Distillation

Exciting news for efficient AI: Microsoft’s BitNet Distillation is a pipeline that converts full-precision large language models (LLMs) into 1.58-bit BitNet students, whose weights take only the ternary values -1, 0, and +1. The students stay close to the original model’s accuracy while using far less memory and running noticeably faster on CPUs. Here’s what you need to know:

Three-Stage Process:

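- SubLN Insertion: Adds extra normalization layers (SubLN) to keep activation variance in check, which stabilizes training of the quantized student.

- Continued Pre-training: Adapts the weight distributions to the ternary constraints, giving the student more capacity to learn downstream tasks.

- Dual Signal Distillation: Trains the student against the full-precision teacher using two signals: the teacher’s output logits and its multi-head attention relations.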
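To make the last two stages more concrete, here is a minimal sketch in plain Python. It rests on assumptions the post does not spell out: the ternary constraint is illustrated with absmean rounding (the scheme described for BitNet b1.58), the logit signal is a temperature-softened KL divergence, and the attention-relation signal compares softmaxed scaled dot products between per-token vectors. All function names, the loss weights, and the toy numbers are hypothetical and are not taken from Microsoft's code.

```python
import math

def absmean_ternary(weights, eps=1e-8):
    """Map a flat list of floats to {-1, 0, +1} plus one shared scale (assumed scheme)."""
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    # Divide by the mean absolute value, round, then clip to [-1, 1].
    ternary = [max(-1, min(1, round(w / scale))) for w in weights]
    return ternary, scale

def softmax(xs, temperature=1.0):
    scaled = [x / temperature for x in xs]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as lists."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def logits_loss(teacher_logits, student_logits, temperature=2.0):
    """Signal 1: match the student's softened output distribution to the teacher's."""
    return kl_divergence(softmax(teacher_logits, temperature),
                         softmax(student_logits, temperature))

def relation_matrix(vectors):
    """Row-wise softmax over scaled dot products between per-token vectors."""
    dim = len(vectors[0])
    return [softmax([sum(a * b for a, b in zip(u, v)) / math.sqrt(dim)
                     for v in vectors])
            for u in vectors]

def attention_relation_loss(teacher_vectors, student_vectors):
    """Signal 2: match token-to-token relations, averaged over rows."""
    pairs = zip(relation_matrix(teacher_vectors), relation_matrix(student_vectors))
    losses = [kl_divergence(t, s) for t, s in pairs]
    return sum(losses) / len(losses)

def dual_signal_loss(task_loss, l_logits, l_relation,
                     logit_weight=1.0, relation_weight=1.0):
    """Weighted sum of the task loss and both distillation signals (weights are hypothetical)."""
    return task_loss + logit_weight * l_logits + relation_weight * l_relation

# Toy data: six weights, three output classes, two tokens with 2-d vectors.
ternary, scale = absmean_ternary([0.9, -0.05, 0.4, -1.2, 0.02, -0.6])
teacher_logits, student_logits = [2.0, 0.5, -1.0], [1.0, 1.0, 0.0]
teacher_vectors = [[1.0, 0.0], [0.0, 1.0]]
student_vectors = [[0.5, 0.5], [0.2, 0.9]]

total = dual_signal_loss(
    0.7,  # placeholder task loss
    logits_loss(teacher_logits, student_logits),
    attention_relation_loss(teacher_vectors, student_vectors),
)
print(ternary, round(scale, 4), round(total, 4))
```

Both distillation terms drop to zero when the student reproduces the teacher exactly, so the combined loss only penalizes the gap that quantization opens up. A real training loop would compute these terms on tensors with automatic differentiation rather than on Python lists.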

Impressive Results:

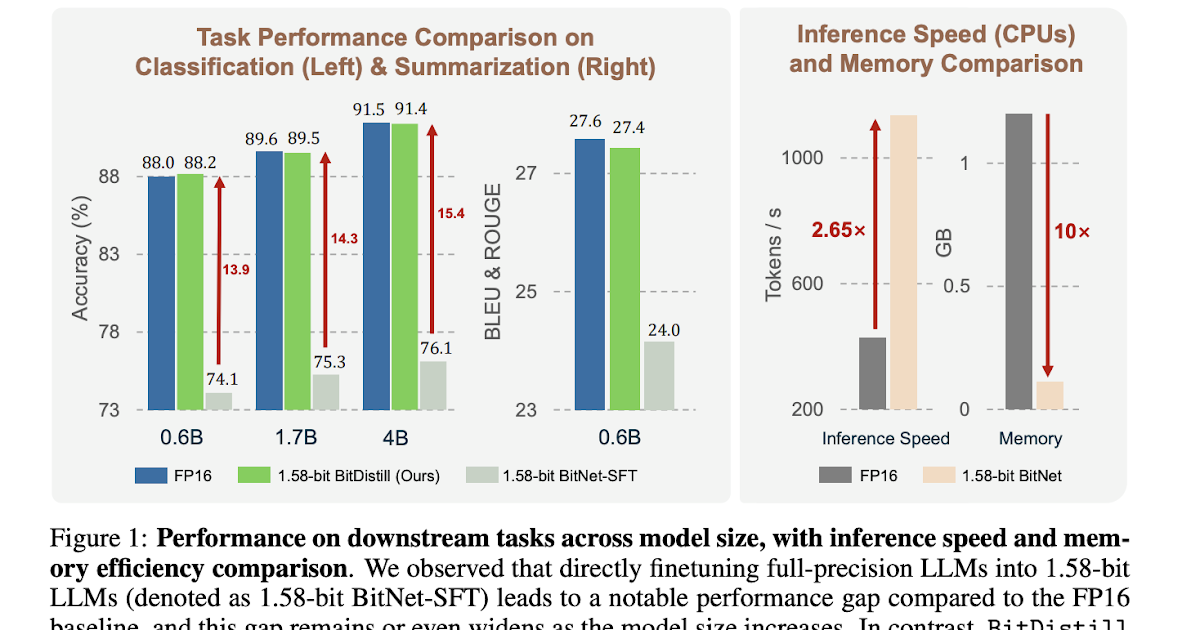

- Up to 10x memory savings and about 2.65x faster CPU inference.

- Accuracy stays close to the FP16 baseline across tasks, including MNLI classification and CNN/DailyMail summarization.
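A quick back-of-the-envelope check shows why a figure near 10x is plausible for memory. The sketch below counts weight storage only and assumes an ideal encoding of log2(3) ≈ 1.58 bits per ternary weight. The parameter count is a hypothetical example, and the post does not say how its own number was measured.

```python
import math

def weight_memory_gb(num_params, bits_per_weight):
    """Storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

num_params = 4_000_000_000            # hypothetical 4B-parameter model
ternary_bits = math.log2(3)           # information content of one value in {-1, 0, +1}

fp16_gb = weight_memory_gb(num_params, 16)
ideal_gb = weight_memory_gb(num_params, ternary_bits)
packed_gb = weight_memory_gb(num_params, 2)   # simple 2-bits-per-weight packing

ideal_ratio = fp16_gb / ideal_gb
packed_ratio = fp16_gb / packed_gb

print(f"FP16: {fp16_gb:.2f} GB")
print(f"Ideal ternary: {ideal_gb:.2f} GB ({ideal_ratio:.1f}x smaller)")
print(f"2-bit packed: {packed_gb:.2f} GB ({packed_ratio:.1f}x smaller)")
```

The ideal ratio is 16 / log2(3), which is just over 10, while a straightforward 2-bit packing gives 8x. Embeddings, activations, and any layers kept at higher precision would lower the end-to-end saving, which fits the "up to" wording.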

This advancement is a game-changer for deploying AI effectively and economically. Join the conversation! Share your thoughts and let’s elevate the world of AI together. 🌍💬 #AI #MachineLearning #Innovation #BitNetDistillation