Unlocking the Future of Image Restoration: The Adaptive Attention Breakthrough

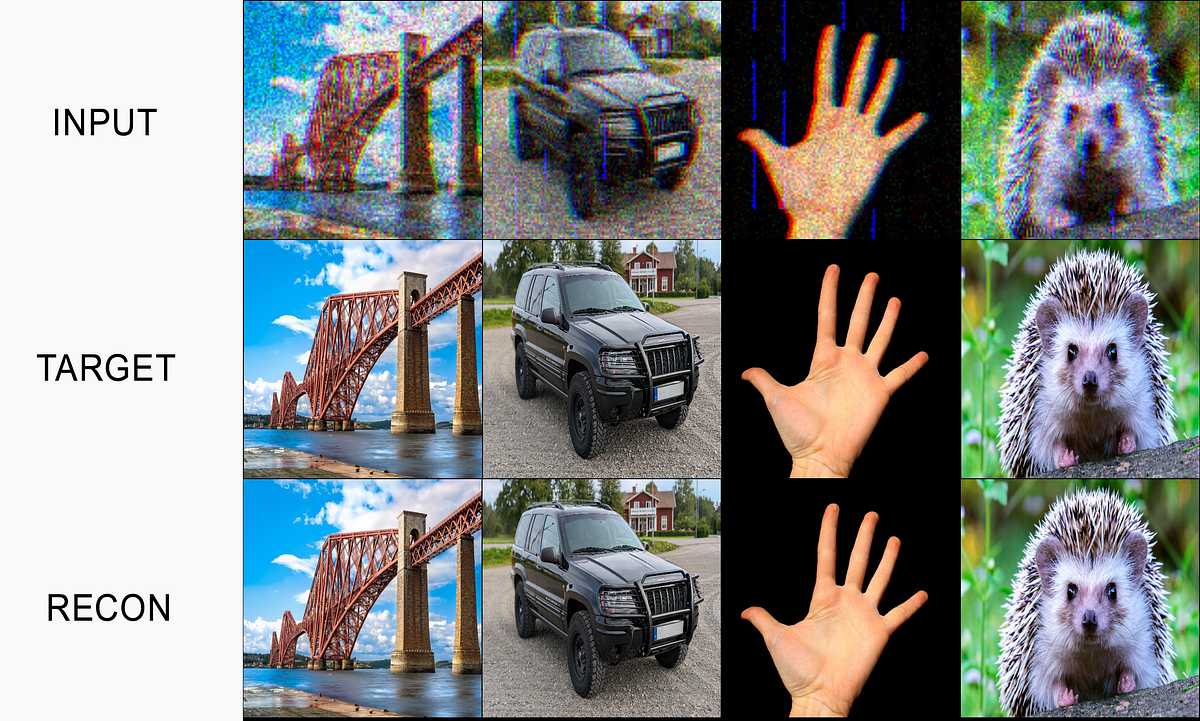

AI is opening a new frontier in image restoration. After 10,000 epochs of training my Adaptive Attention architecture, the results have exceeded my expectations. Here’s what you need to know:


Testing Setup:

- Input: Heavily degraded images (low resolution, noise, color errors)

- Output: Impeccable 512×512 reconstructions

- Architecture: Adaptive Attention mixture-of-experts (MoE) with 3 sparse experts

- Hardware: RTX 4070 Ti Super
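The post doesn’t say how the 3 sparse experts are selected. For readers new to the idea, here is a minimal sketch of generic top-k mixture-of-experts routing in plain Python, assuming a softmax gate and k=1. The toy linear experts, the gate weights, and the names `route` and `expert_forward` are hypothetical illustrations, not the Adaptive Attention implementation.

```python
import math

# Hypothetical toy setup: 3 experts, each a scalar-weighted linear map on a
# feature vector. A real model would use neural network sub-blocks instead.
EXPERT_SCALES = [0.5, 1.0, 2.0]

# Hypothetical router weights: one row of gate weights per expert.
GATE_WEIGHTS = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expert_forward(idx, x):
    """Run expert `idx` on feature vector x (toy linear expert)."""
    return [EXPERT_SCALES[idx] * v for v in x]


def route(x, k=1):
    """Sparse top-k routing: only the k highest-scoring experts run.

    Returns the combined output and the indices of the experts used.
    """
    # Gate logits: dot product of each expert's gate row with the input.
    logits = [sum(w * v for w, v in zip(row, x)) for row in GATE_WEIGHTS]
    probs = softmax(logits)
    # Keep only the k experts with the highest gate probability.
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    # Renormalize the selected gate weights so they sum to 1.
    norm = sum(probs[i] for i in top)
    out = [0.0] * len(x)
    for i in top:
        y = expert_forward(i, x)  # unselected experts are never evaluated
        g = probs[i] / norm
        out = [o + g * yv for o, yv in zip(out, y)]
    return out, top


if __name__ == "__main__":
    out, used = route([0.1, 3.0, 0.2], k=1)
    print("experts used:", used, "output:", out)
```

With k=1, each input runs through only one of the three experts, so, assuming equally sized experts, expert compute per input is about a third of running all of them. That is the general sense in which sparse MoE allocates compute adaptively; the post does not say whether its speedup comes from this mechanism alone.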


Performance Highlights:

- Quality: PSNR +4.3 dB (versus dense methods)

- Speed: 30× faster

- Visual results: Clean reconstructions with no visible hallucinations, natural colors, and sharp details.

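To put the +4.3 dB figure in context: PSNR is defined as 10·log10(MAX²/MSE), so at the same peak value a 4.3 dB gain means the mean squared error shrank by a factor of 10^0.43, roughly 2.7×. Below is a minimal sketch assuming 8-bit images (MAX = 255) flattened into lists of pixel values; the function names and example pixels are hypothetical, and the post does not describe its exact evaluation protocol.

```python
import math


def mse(a, b):
    """Mean squared error between two equal-length pixel sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    err = mse(reference, restored)
    if err == 0:
        return math.inf  # identical images have unbounded PSNR
    return 10.0 * math.log10(max_value ** 2 / err)


def mse_ratio_for_gain(gain_db):
    """Factor by which MSE shrinks for a given PSNR gain (same MAX)."""
    return 10.0 ** (gain_db / 10.0)


if __name__ == "__main__":
    ref = [10, 20, 30, 40]        # hypothetical reference pixels
    restored = [12, 18, 30, 41]   # hypothetical restored pixels
    print(f"PSNR: {psnr(ref, restored):.2f} dB")
    print(f"+4.3 dB => MSE lower by {mse_ratio_for_gain(4.3):.2f}x")
```

Because the scale is logarithmic, each additional 10 dB corresponds to a 10× reduction in MSE.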

These results suggest that adaptive compute allocation isn’t just faster; it’s smarter. Are you ready to elevate your understanding of AI and image processing?

👉 Like, Share, and Comment to join the conversation! 🌟 #AI #ImageRestoration #AdaptiveAttention #TechInnovation