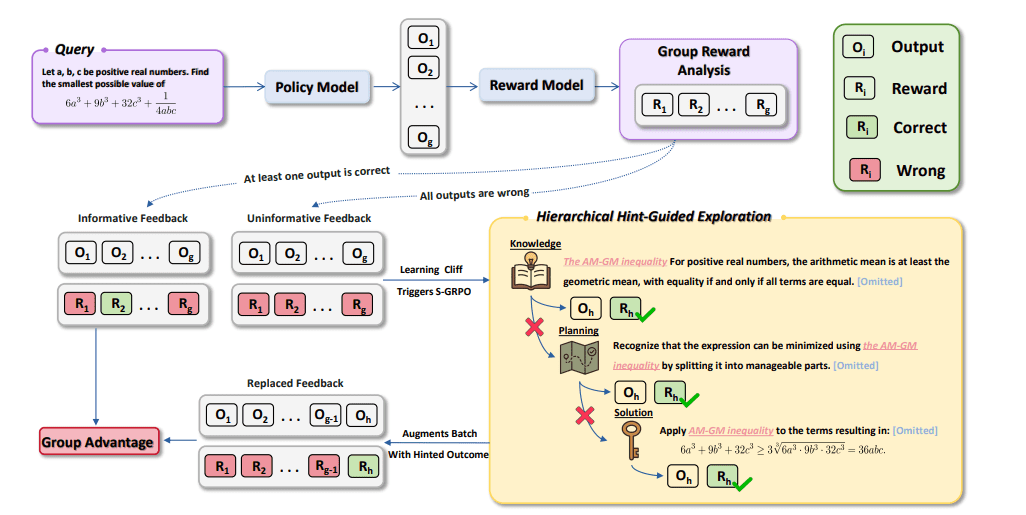

Enhancing the reasoning skills of large language models (LLMs) remains challenging, especially on complex problems. Researchers Xichen Zhang, Sitong Wu, Yinghao Zhu, Haoru Tan, Shaozuo Yu, and Ziyi He propose a new training framework, Scaf-GRPO (Scaffolded Group Relative Policy Optimization), to address the “learning cliff”. When a problem lies far beyond a model's current ability, every sampled attempt fails and the reward stays at zero. GRPO's group-relative advantage then collapses to zero, so the problem contributes nothing to learning. Scaf-GRPO intervenes only when the model's independent learning stalls. It supplies minimal guidance through tiered hints that range from abstract concepts to concrete steps.

On mathematical reasoning benchmarks, Scaf-GRPO improves pass@1 on AIME24 by a relative 44.3% over a vanilla GRPO baseline. The approach lets models learn from problems that were previously out of reach while preserving their exploratory autonomy. Planned future work includes automating hint generation and making the scaffolding more adaptive. Scaf-GRPO offers a promising method for extending the reasoning capabilities of language models.
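
To make the learning cliff concrete, here is a minimal Python sketch of a GRPO-style group-relative advantage. It assumes binary correctness rewards and normalization by the group mean and population standard deviation. The exact normalization and the `eps` constant are assumptions, and real implementations differ. When every sampled solution in a group fails, every advantage is exactly zero, so that problem produces no policy-gradient signal.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantage: each reward normalized against its own group.

    Uses population std and a small eps to avoid division by zero (assumptions).
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# A group of four sampled solutions with mixed correctness: some signal survives.
mixed = group_relative_advantages([1.0, 0.0, 0.0, 1.0])

# The "learning cliff": every sample fails, so every advantage is zero
# and the problem is invisible to the gradient.
all_fail = group_relative_advantages([0.0, 0.0, 0.0, 0.0])

print(mixed)
print(all_fail)
```

The same collapse happens when every sample succeeds. Only groups with a mix of outcomes carry a learning signal, which is why hard problems that the model never solves get stuck.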

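The tiered-hint idea can be sketched as an escalation loop. This is a hypothetical illustration, not the authors' implementation. The tier names (`knowledge`, `planning`, `solution`), the `scaffolded_rollout` function, and the `sample_group` callback are all assumptions. "Stagnation" is also simplified here to "every response in the current group fails." The loop first lets the model attempt the bare problem. It then adds progressively more concrete hints, stopping as soon as any response succeeds.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical hint tiers, ordered from most abstract to most concrete.
HINT_TIERS = ["knowledge", "planning", "solution"]


def scaffolded_rollout(
    problem: str,
    hints: Dict[str, str],
    sample_group: Callable[[str], List[float]],
) -> Tuple[str, List[float]]:
    """Return the prompt and group rewards using the least specific hint that yields a success.

    `sample_group` is a hypothetical stand-in that samples a group of responses
    for a prompt and returns one binary correctness reward per response.
    """
    # First, let the model try the problem on its own.
    prompt = problem
    rewards = sample_group(prompt)
    if any(r > 0 for r in rewards):
        return prompt, rewards  # independent success: no scaffolding needed

    # Simplified stagnation check: every response failed, so escalate hints.
    for tier in HINT_TIERS:
        prompt = f"{problem}\n\nHint: {hints[tier]}"
        rewards = sample_group(prompt)
        if any(r > 0 for r in rewards):
            break  # stop at the least specific hint that works
    return prompt, rewards


# Toy sampler: the "model" succeeds only once a planning or solution hint is present.
def toy_sampler(prompt: str) -> List[float]:
    if "Pair the first and last terms" in prompt or "n(n+1)/2" in prompt:
        return [1.0, 0.0, 0.0, 0.0]
    return [0.0, 0.0, 0.0, 0.0]


hints = {
    "knowledge": "Recall how arithmetic series behave.",
    "planning": "Pair the first and last terms, then count the pairs.",
    "solution": "The sum 1 + 2 + ... + n equals n(n+1)/2.",
}
prompt, rewards = scaffolded_rollout("Compute 1 + 2 + ... + 100.", hints, toy_sampler)
print(prompt)
print(rewards)
```

In this toy run, the knowledge hint alone is not enough, so the loop escalates once more and stops at the planning hint. It never reveals the solution. Stopping at the least specific hint that works reflects the "minimal guidance" principle, because the model still has to produce most of the reasoning itself. The group now contains at least one success. Unless every response succeeds, its advantages are therefore no longer all zero.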