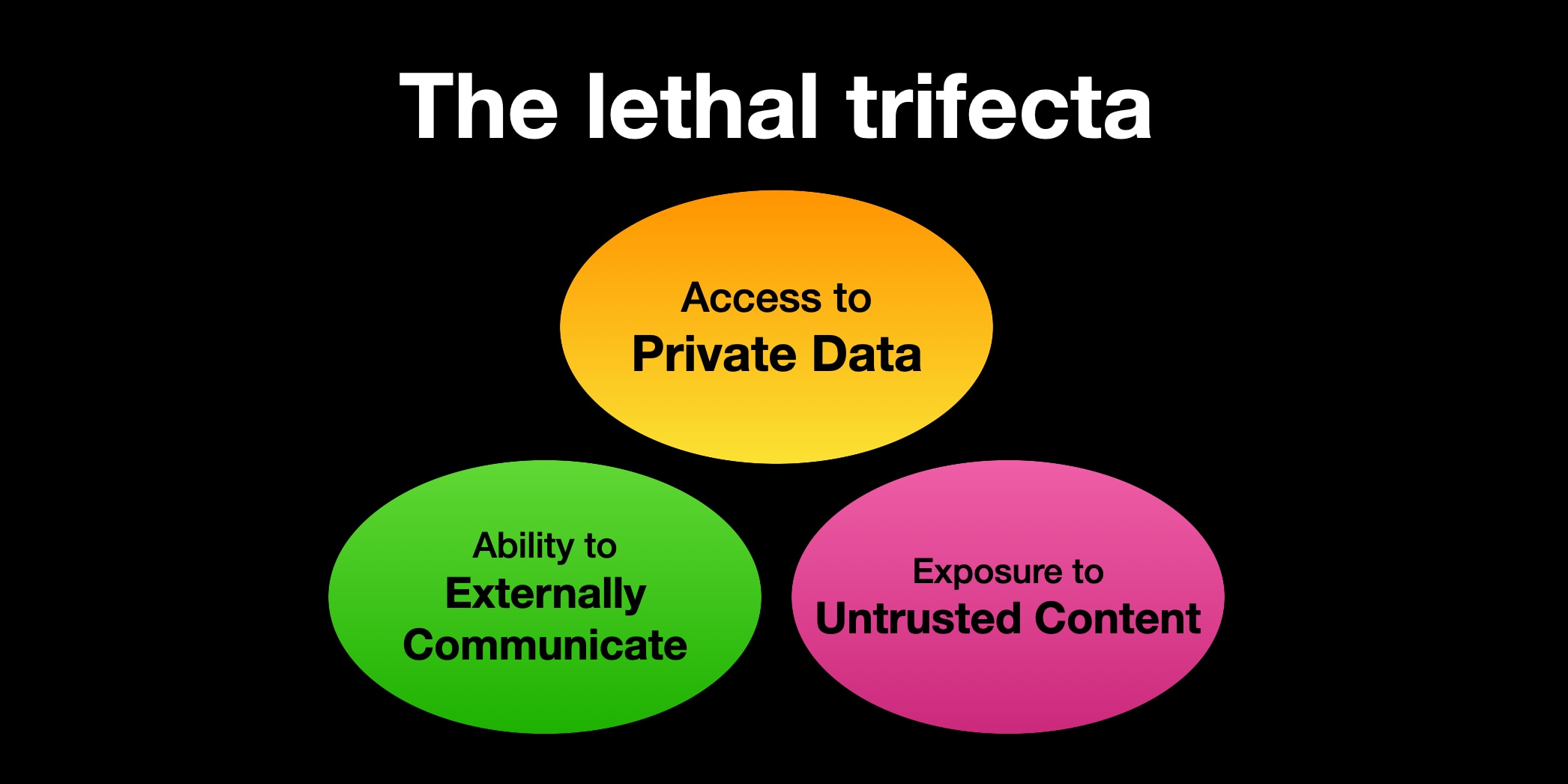

The article emphasizes the danger of AI agents that combine three risk factors: access to private data, exposure to untrusted content, and the ability to communicate externally. When all three coexist, an attacker can plant instructions in content the agent reads and trick it into sending sensitive information back to the attacker. Large Language Models (LLMs) are particularly vulnerable because they follow instructions wherever those instructions appear and cannot reliably distinguish the user's commands from ones embedded in the content they process. The issue has been observed across numerous platforms, from Microsoft and GitHub to ChatGPT and Google tools. Although vendors often ship fixes and security measures, users remain at risk, especially those who mix tools from different sources. The article warns against assuming that guardrails will ensure protection and encourages users not to combine tools that together form this trifecta. Ultimately, understanding these vulnerabilities is crucial for safeguarding one’s data in an increasingly interconnected AI landscape.
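The advice to avoid combining risky tools can be expressed as a simple audit over an agent's tool set. The following is a minimal Python sketch under stated assumptions: the `Tool` class, its three boolean flags, and the example tool names are hypothetical and not part of any real agent framework. It does not detect prompt injection; it only reports whether all three capabilities are reachable together, which is the condition the article warns about.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    """Hypothetical description of one tool an agent can call."""
    name: str
    reads_private_data: bool = False         # e.g. inbox, files, internal docs
    ingests_untrusted_content: bool = False  # e.g. web pages, incoming email
    communicates_externally: bool = False    # e.g. HTTP requests, sending mail


def has_lethal_trifecta(tools: list[Tool]) -> bool:
    """Return True if the combined tool set covers all three risk factors.

    The check is over the whole set: each tool may look harmless on its own,
    but the agent can chain them, so any tool can supply any factor.
    """
    private = any(t.reads_private_data for t in tools)
    untrusted = any(t.ingests_untrusted_content for t in tools)
    external = any(t.communicates_externally for t in tools)
    return private and untrusted and external


# Hypothetical example tools (names and flags are illustrative assumptions).
# Fetching a URL is treated as external communication, since data can leak
# through the request itself.
email = Tool("read_inbox", reads_private_data=True, ingests_untrusted_content=True)
browser = Tool("fetch_url", ingests_untrusted_content=True, communicates_externally=True)
notes = Tool("search_notes", reads_private_data=True)

print(has_lethal_trifecta([email, notes]))    # False: no way to send data out
print(has_lethal_trifecta([email, browser]))  # True: all three factors present
```

The second call shows the point about mixing tools: neither the inbox reader nor the URL fetcher covers all three factors alone, yet together they do.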
