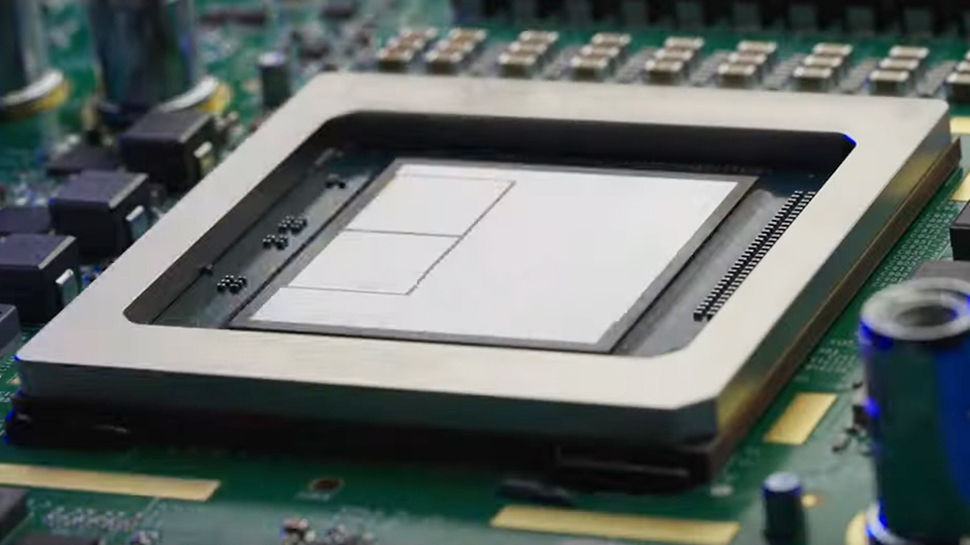

OpenAI has started using Google’s tensor processing units (TPUs) to power ChatGPT and other products, a significant shift away from its dependence on Nvidia GPUs. The move, reported by Reuters, makes OpenAI a Google Cloud TPU customer alongside other tech companies such as Apple and Anthropic. Although the leased TPUs are not Google’s most advanced models, they are designed for steady-state inference and offer better throughput at lower operating cost.

The decision stems from OpenAI’s need to diversify its compute resources as demand grows from ChatGPT’s more than 100 million daily users. While OpenAI relies primarily on Microsoft Azure, adopting Google TPUs signals a strategic effort to control costs and reduce the risk of depending on a single vendor. The move follows a broader industry practice of sourcing hardware from multiple suppliers for flexibility and pricing leverage, though OpenAI is not abandoning Nvidia altogether.

Source link