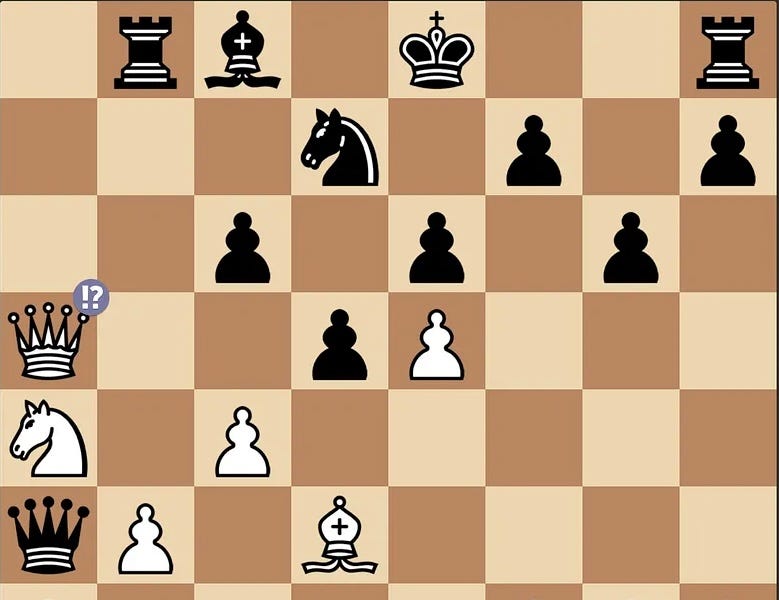

The essay argues that large language models (LLMs) are limited in how well they understand and interact with the world because they lack explicit world models. It opens with a humorous example: a video of two men playing chess in which one makes an illegal move, illustrating that LLMs can reproduce the rules of a game yet fail to apply them correctly in practice. Drawing on cognitive psychology, the author emphasizes the importance of world models: dynamic, updatable representations of reality. Traditional AI relies on explicit world models to make sense of information and carry out tasks, whereas LLMs operate as “black boxes” that depend on statistical correlations rather than coherent cognitive frameworks. The result is hallucinations and poor decision-making, which the author illustrates with examples from chess and everyday tasks. The author warns that without stable world models, LLM outputs can be unreliable and potentially dangerous, and calls for a deeper understanding of cognitive science to inform the development of artificial general intelligence (AGI).
