Unlock the Future of AI Benchmarking with Harbor!

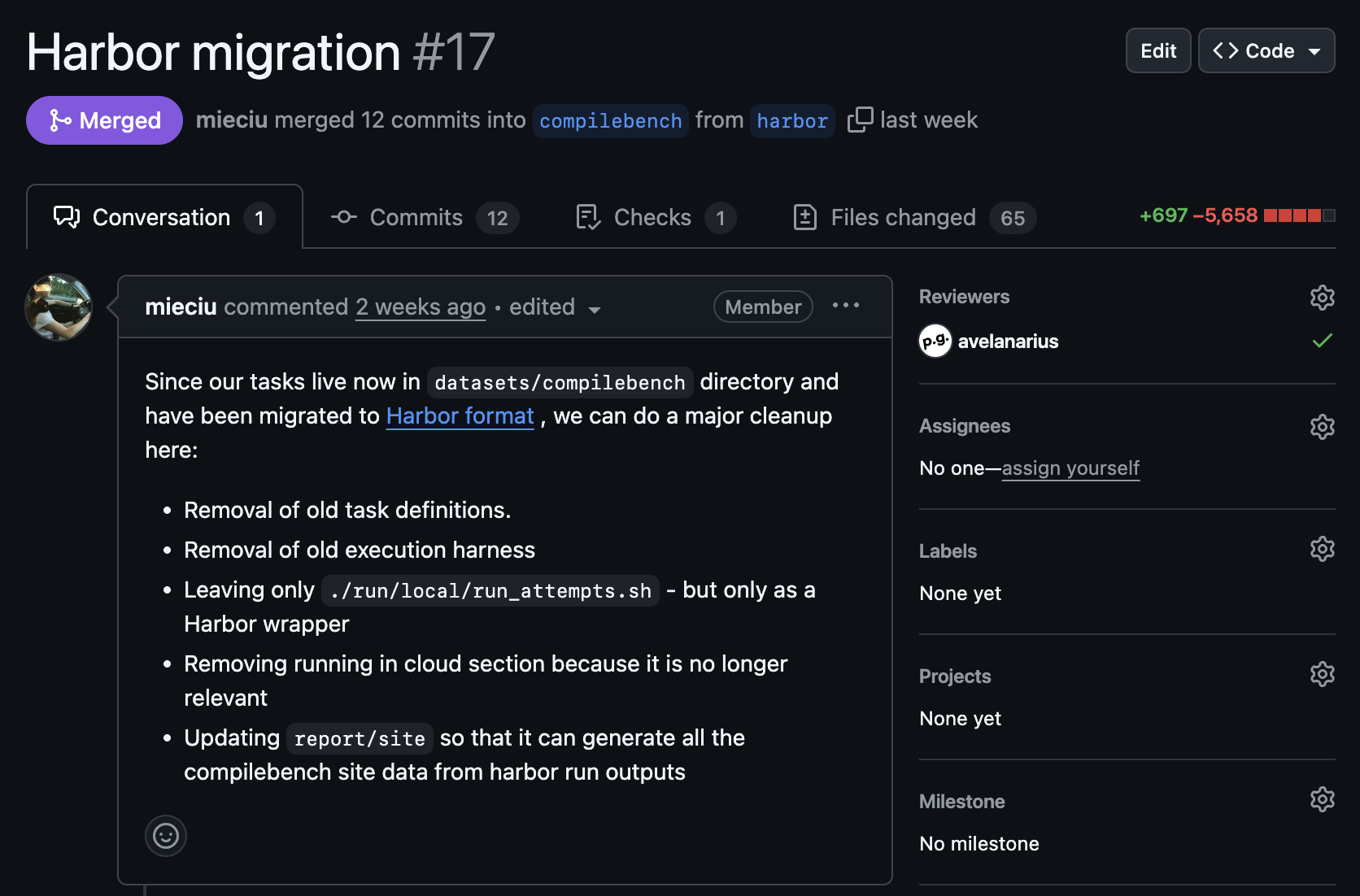

We’re thrilled to announce that CompileBench has moved to Harbor, an open-source framework for evaluating AI agents in containerized environments. Replacing our cumbersome task runner with Harbor has left us with a leaner setup that is much easier to work with.

Why Harbor?

- Maintenance-Free Harness: Focus on evaluations, not on keeping the engine running.

- Reproducibility: Containerized tasks make runs reproducible, which is essential for both scientific and engineering work.

- Agility: Easily switch between local Docker and cloud-based environments.

- Collaboration: A standardized framework makes it easier to share tasks and compare results across teams.

- Extensibility: Enhance capabilities without forking the project.

By consolidating our benchmarks into Harbor, we gained:

- A significantly smaller codebase.

- Simpler task creation and management.

- Real-time visualization of AI-agent interactions.

Harbor makes benchmarking simpler for the whole AI community. Ready to level up your AI evaluations? Explore Harbor and share your experiences below!