Are Your AI Agents Hallucinating? Discover the unseen threats of AI hallucinations and learn how to safeguard user trust.

AI hallucinations occur when AI agents give confident but incorrect answers, which can lead to user dissatisfaction and financial losses. Here’s what causes them and why they matter for your business:

- Causes: Hallucinations arise from flawed reasoning, outdated data, or ambiguous prompts, any of which can produce misleading outputs.

- Consequences:

  - Eroded user trust

  - Brand damage

  - Compliance risks

  - Financial losses

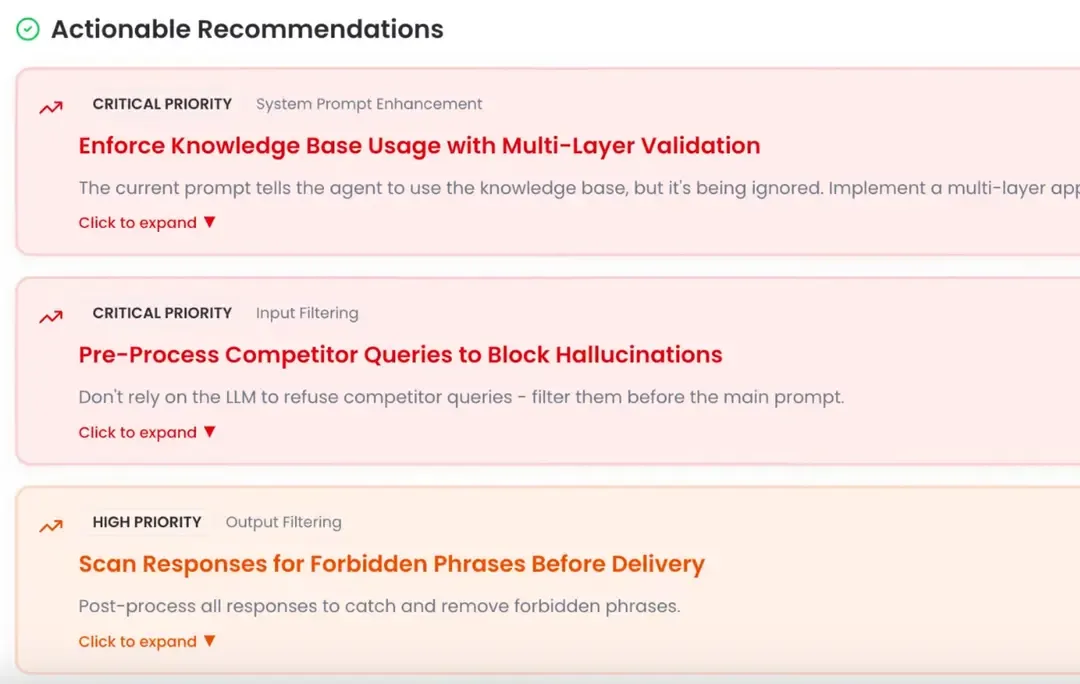

Enter Noveum.ai: Our innovative platform offers automated, real-time detection and diagnosis of these hallucinations. By using a suite of specialized evaluators, Noveum.ai can:

- Ensure responses are truthful and grounded.

- Diagnose issues causing hallucinations quickly.

- Reduce hallucination occurrences over time.
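To make the idea of a "grounded" response concrete, here is a minimal Python sketch of a naive groundedness check. It is not Noveum.ai's implementation or API. The function name `groundedness_score`, the word-overlap heuristic, and the `threshold` value are all assumptions chosen for illustration. The sketch simply measures how many sentences in a response are mostly made of words that also appear in the source context.

```python
import re


def _tokens(text: str) -> set[str]:
    """Lowercase a string and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def groundedness_score(response: str, context: str, threshold: float = 0.6) -> float:
    """Return the fraction of response sentences supported by the context.

    Hypothetical heuristic: a sentence counts as supported when at least
    `threshold` of its distinct words also occur in the context.
    """
    context_tokens = _tokens(context)

    # Split on sentence-ending punctuation followed by whitespace.
    raw_sentences = re.split(r"(?<=[.!?])\s+", response.strip())
    sentences = [_tokens(s) for s in raw_sentences]
    sentences = [words for words in sentences if words]  # drop empty fragments

    if not sentences:
        return 1.0  # nothing was claimed, so nothing is unsupported

    supported = sum(
        1
        for words in sentences
        if len(words & context_tokens) / len(words) >= threshold
    )
    return supported / len(sentences)


# Illustrative data, invented for this example.
context = "Refunds are available within 30 days of purchase."
response = "Refunds are available within 30 days. Shipping is free worldwide."
score = groundedness_score(response, context)
print(f"Groundedness: {score:.2f}")
```

In this example the first sentence is backed by the context and the second is not, so half of the response counts as grounded. Word overlap is a crude signal: it misses paraphrases and can be fooled by negation, so treat it as a starting point for intuition only.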

Don’t wait until it’s too late! Safeguard your AI investments. Join us in building trustworthy AI agents that enhance user satisfaction.

👉 Schedule your demo today! 🚀 Let’s build a reliable AI future together!