Navigating the Risks of Large Language Models: Understanding Prompt Injection

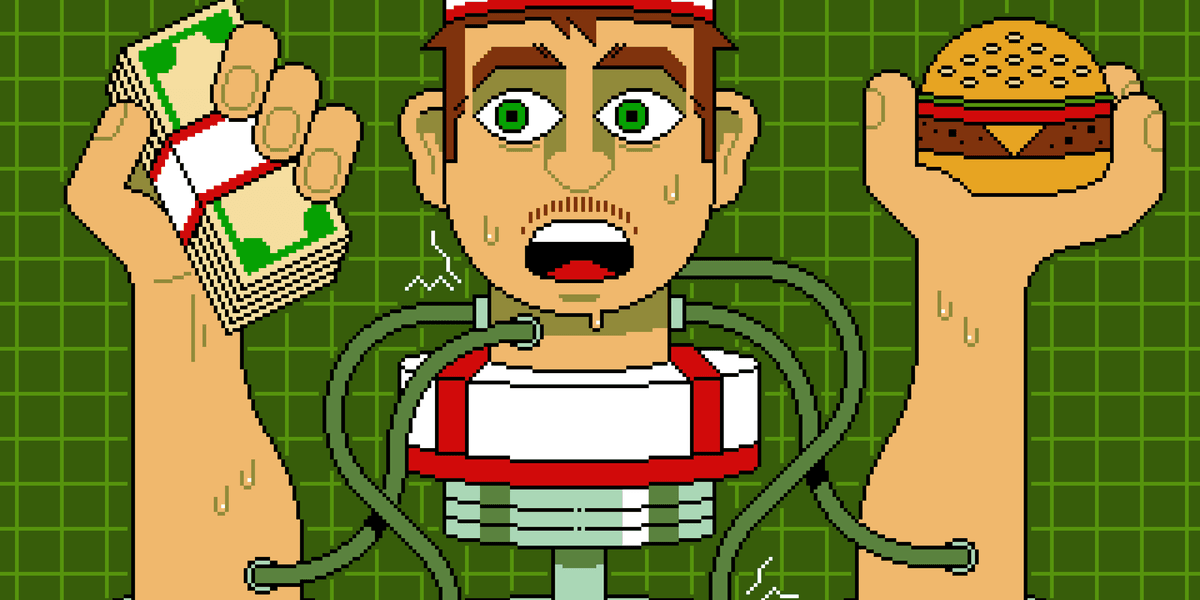

In the world of Artificial Intelligence, prompt injection poses a significant threat to the integrity of large language models (LLMs). A drive-through worker knows not to hand over the cash drawer, however bizarre the request. LLMs, by contrast, often fall prey to cleverly crafted prompts that bypass their guardrails. Here are some key insights:

- Human vs. AI Judgment: Unlike humans, LLMs lack the nuanced contextual understanding that helps people resist manipulation.

- The Layers of Human Defense:

  - Instincts: Humans instinctively read tone and motive.

  - Social Learning: Trust is built through repeated interactions.

  - Institutional Mechanisms: Established procedures guide decision-making.

LLMs struggle to weigh context and can be overconfident in their responses. As we advance our AI systems, we must rethink how they process complex, potentially adversarial input safely.

🔗 Join the conversation on improving AI security and share your thoughts! #AI #MachineLearning #TechTrends