Understanding Agentwashing in AI

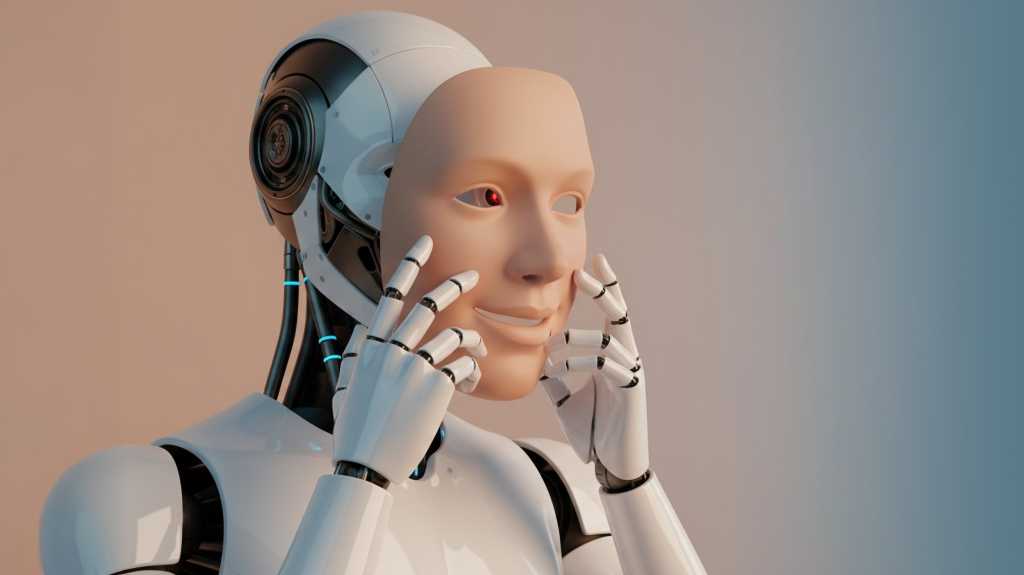

Agentwashing is the practice of labeling AI products as agentic when they amount to little more than orchestration, LLM calls, and scripts. Countering it starts with clear terminology, because how the problem is named shapes how stakeholders perceive it.

Instead of relying on polished demos, demand concrete evidence. Architecture diagrams, evaluation methods, and documented limitations are harder to fabricate than slick presentations. If a vendor can’t explain transparently how its AI system reasons, plans, and recovers, treat that as a red flag.

Furthermore, tie vendor claims to measurable outcomes and capabilities. Contracts should specify quantifiable workflow improvements, autonomy levels, error rates, and governance boundaries rather than ambiguous phrases like “autonomous AI.” This keeps the focus on results that can be verified, and the transparency and accountability it brings are what build trust in AI solutions.
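One way to make contract terms like these checkable is to write each one as a named metric with a threshold and compare it against what a pilot actually measured. The Python sketch below is only an illustration: the metric names, thresholds, and pilot figures are hypothetical, and a real contract would define its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriterion:
    """One measurable contract term (names and thresholds are hypothetical)."""
    name: str
    threshold: float
    higher_is_better: bool

    def is_met(self, measured: float) -> bool:
        # Compare in the direction that counts as "good" for this metric.
        if self.higher_is_better:
            return measured >= self.threshold
        return measured <= self.threshold


def unmet_criteria(criteria, measurements):
    """Return the names of criteria that were not measured or missed their threshold."""
    failures = []
    for criterion in criteria:
        measured = measurements.get(criterion.name)
        # A metric the vendor never reported counts as unmet.
        if measured is None or not criterion.is_met(measured):
            failures.append(criterion.name)
    return failures


# Hypothetical contract terms: an autonomy level, an error rate, a governance boundary.
CRITERIA = [
    AcceptanceCriterion("tasks_completed_without_human_pct", 80.0, True),
    AcceptanceCriterion("error_rate_pct", 2.0, False),
    AcceptanceCriterion("actions_outside_approved_scope", 0.0, False),
]

# Hypothetical pilot results; the governance metric was never reported.
pilot_results = {
    "tasks_completed_without_human_pct": 64.0,
    "error_rate_pct": 1.5,
}

print(unmet_criteria(CRITERIA, pilot_results))
```

Treating an unreported metric as a failure is a deliberate choice in this sketch: it puts the burden of evidence on the vendor rather than on the buyer.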