Efficient Long-Context Modeling with Glyph

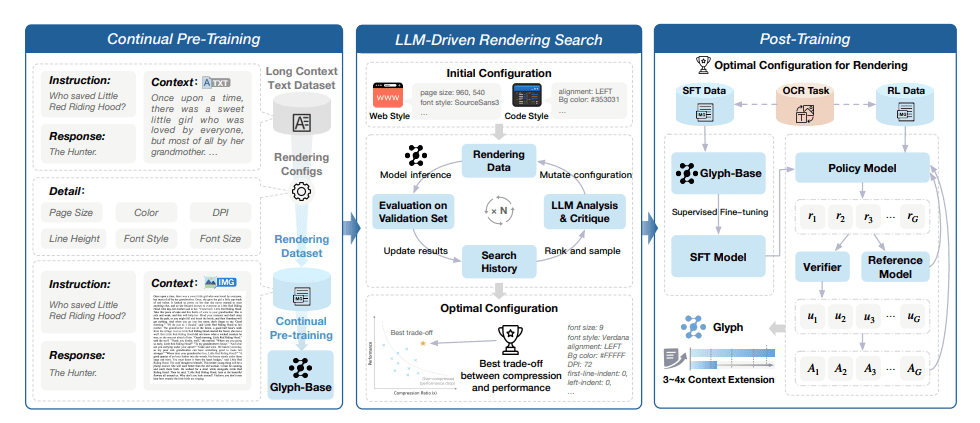

Long-context language models are increasingly needed for complex tasks, but extending context length is computationally expensive. Researchers from Tsinghua University introduce Glyph, a framework that renders long texts into images so that a vision-language model (VLM) can read them while preserving their essential semantics. Glyph achieves 3 to 4 times compression in token length, which speeds up both processing and training and makes it feasible to handle contexts exceeding one million tokens.
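A little arithmetic makes the compression figure concrete. The sketch below assumes the ratio is measured as the number of text tokens a passage would need divided by the number of visual tokens its rendered image occupies. The helper names `compression_ratio` and `effective_text_capacity`, and all token counts (including the 128K window), are hypothetical and chosen only for illustration. They are not taken from the Glyph paper.

```python
# Hypothetical helpers illustrating compression arithmetic; the numbers
# below are illustrative, not measurements reported for Glyph.

def compression_ratio(text_tokens: int, visual_tokens: int) -> float:
    """Ratio of original text tokens to the visual tokens that replace them."""
    if visual_tokens <= 0:
        raise ValueError("visual_tokens must be positive")
    return text_tokens / visual_tokens


def effective_text_capacity(context_window: int, ratio: float) -> int:
    """Approximate number of original text tokens that fit in a window of
    `context_window` visual tokens when each one stands in for `ratio` text tokens."""
    return int(context_window * ratio)


# Assumed example: a page that would tokenize to 3,000 text tokens is
# rendered into an image the VLM encodes as 800 visual tokens.
ratio = compression_ratio(3000, 800)
print(f"compression: {ratio:.2f}x")              # prints: compression: 3.75x
print(effective_text_capacity(128_000, ratio))   # prints: 480000
```

At a 3.75 times ratio, a window of 128,000 visual tokens covers roughly 480,000 tokens of original text. Higher ratios stretch the same window further, presumably at some cost in legibility of the rendered pages.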

Rather than simply extending the context window of text-only models, this approach sidesteps their memory limits by packing more text into each token through optimized visual representations. Glyph selects its rendering parameters with an LLM-driven genetic search, which yields speedups of up to 4.8 times in pre-filling and 4.4 times in decoding. Evaluated on benchmarks such as LongBench and against models such as GPT-4, Glyph delivers competitive performance. This points to practical applications in document understanding and multi-step reasoning and suggests a new direction for long-context modeling.
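The search over rendering parameters is only described at a high level here, so what follows is a minimal sketch of a plain genetic algorithm (elitist selection, crossover, mutation) over a few discrete rendering knobs. It is not Glyph's implementation, and several parts are assumptions. The parameter set (`dpi`, `font_size`, `line_spacing`) and its value ranges are hypothetical. The toy `fitness` function is also hypothetical: a real search would render a validation set and score the VLM's accuracy alongside the measured compression. Finally, random mutation stands in for the LLM-guided proposals described for Glyph.

```python
import random
from dataclasses import dataclass

# Hypothetical search space; Glyph's actual rendering parameters may differ.
DPI_CHOICES = (72, 96, 120, 150)
FONT_SIZES = (8, 9, 10, 11, 12)
LINE_SPACINGS = (1.0, 1.15, 1.5)


@dataclass(frozen=True)
class RenderConfig:
    dpi: int
    font_size: int
    line_spacing: float


def random_config(rng: random.Random) -> RenderConfig:
    return RenderConfig(rng.choice(DPI_CHOICES), rng.choice(FONT_SIZES),
                        rng.choice(LINE_SPACINGS))


def fitness(cfg: RenderConfig) -> float:
    """Toy objective (hypothetical): reward dense text, penalize illegible glyphs."""
    glyph_pixels = cfg.dpi * cfg.font_size            # proxy for rendered glyph size
    density = 960 / glyph_pixels / cfg.line_spacing   # smaller, tighter text -> fewer visual tokens
    legibility = min(1.0, glyph_pixels / 960) ** 2    # glyphs below the threshold read poorly
    return density * legibility


def crossover(a: RenderConfig, b: RenderConfig, rng: random.Random) -> RenderConfig:
    # Each parameter is inherited from one of the two parents at random.
    return RenderConfig(rng.choice((a.dpi, b.dpi)),
                        rng.choice((a.font_size, b.font_size)),
                        rng.choice((a.line_spacing, b.line_spacing)))


def mutate(cfg: RenderConfig, rng: random.Random, rate: float = 0.3) -> RenderConfig:
    # Random resampling stands in for LLM-proposed edits (assumption).
    return RenderConfig(
        rng.choice(DPI_CHOICES) if rng.random() < rate else cfg.dpi,
        rng.choice(FONT_SIZES) if rng.random() < rate else cfg.font_size,
        rng.choice(LINE_SPACINGS) if rng.random() < rate else cfg.line_spacing,
    )


def genetic_search(pop_size: int = 12, generations: int = 20,
                   elite: int = 4, seed: int = 0) -> RenderConfig:
    rng = random.Random(seed)
    population = [random_config(rng) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[:elite]  # elitism: the best configs survive unchanged
        children = [mutate(crossover(*rng.sample(parents, 2), rng), rng)
                    for _ in range(pop_size - elite)]
        population = parents + children
    return max(population, key=fitness)


best = genetic_search()
print(best, round(fitness(best), 3))
```

Because elitism carries the best configurations into each new generation unchanged, the best fitness never decreases. Replacing `mutate` with a function that asks an LLM to propose new configurations from the current leaders would move this sketch closer to the LLM-driven search described above.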