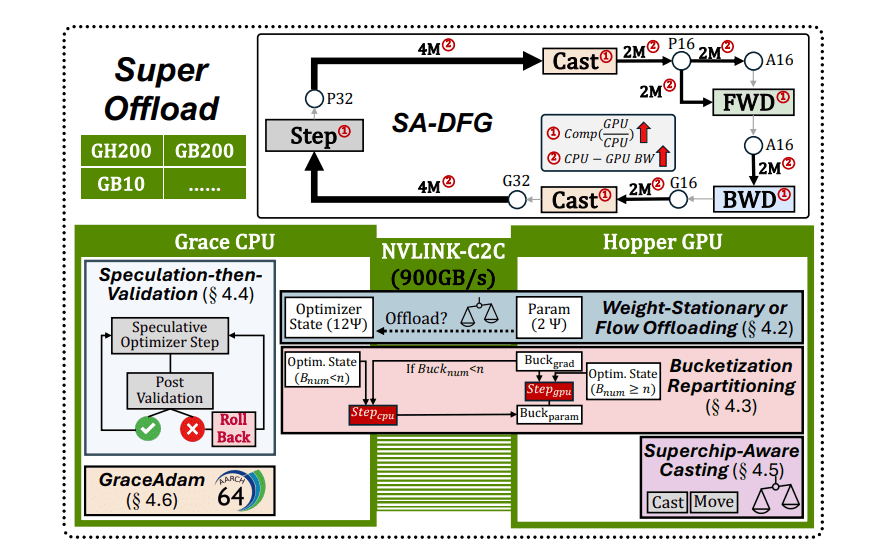

Demand for advanced artificial intelligence is driving the need for new computing hardware, particularly ‘Superchips’ that integrate GPUs and CPUs. Researchers from the University of Illinois and Anyscale have developed SuperOffload, a system designed specifically for training large language models (LLMs) on these processors. SuperOffload combines techniques such as adaptive weight offloading and an Adam optimizer tuned for this hardware, achieving up to 2.5x higher throughput than traditional methods. This makes it possible to train models as large as 25 billion parameters on a single Superchip, seven times larger than what GPU-only training can handle. By distributing data across GPUs, CPUs, and storage, SuperOffload improves both the efficiency and the scalability of LLM training. Extensions such as SuperOffload-Ulysses support long-sequence training of up to one million tokens while achieving 55% resource utilization. The work is a significant step toward optimizing AI model training on the latest Superchip hardware.
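To give a rough sense of why offloading matters at this scale, the sketch below estimates the memory taken by model states alone. It assumes the commonly used accounting for mixed-precision Adam training of 16 bytes per parameter (fp16 weights and gradients, plus fp32 master weights, momentum, and variance). That figure and the function names are illustrative assumptions and are not taken from the SuperOffload work; activations and temporary buffers are ignored.

```python
# Back-of-envelope memory estimate for mixed-precision Adam training.
# ASSUMPTION: 16 bytes per parameter, a common accounting for this setup.
# These constants are not figures reported by the SuperOffload authors.
BYTES_FP16_WEIGHTS = 2     # half-precision weights
BYTES_FP16_GRADS = 2       # half-precision gradients
BYTES_FP32_OPTIMIZER = 12  # fp32 master weights + Adam momentum + Adam variance


def model_state_gb(num_params: float) -> dict:
    """Return model-state memory in GB (1 GB = 1e9 bytes), split by component."""
    return {
        "weights": num_params * BYTES_FP16_WEIGHTS / 1e9,
        "grads": num_params * BYTES_FP16_GRADS / 1e9,
        "optimizer": num_params * BYTES_FP32_OPTIMIZER / 1e9,
    }


def total_gb(num_params: float) -> float:
    """Total model-state memory in GB, excluding activations."""
    return sum(model_state_gb(num_params).values())


if __name__ == "__main__":
    large = 25e9          # the 25-billion-parameter model mentioned above
    gpu_only = large / 7  # "seven times" smaller, per the claim above

    print(model_state_gb(large))
    print(f"25B model states: {total_gb(large):.0f} GB")
    print(f"GPU-only-sized model states: {total_gb(gpu_only):.1f} GB")
```

Under these assumptions, the optimizer states account for three quarters of the total, which is why moving them and the Adam update to CPU memory is the usual first step in offloading schemes.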
