Understanding Token Smuggling: The Next Frontier in LLM Security

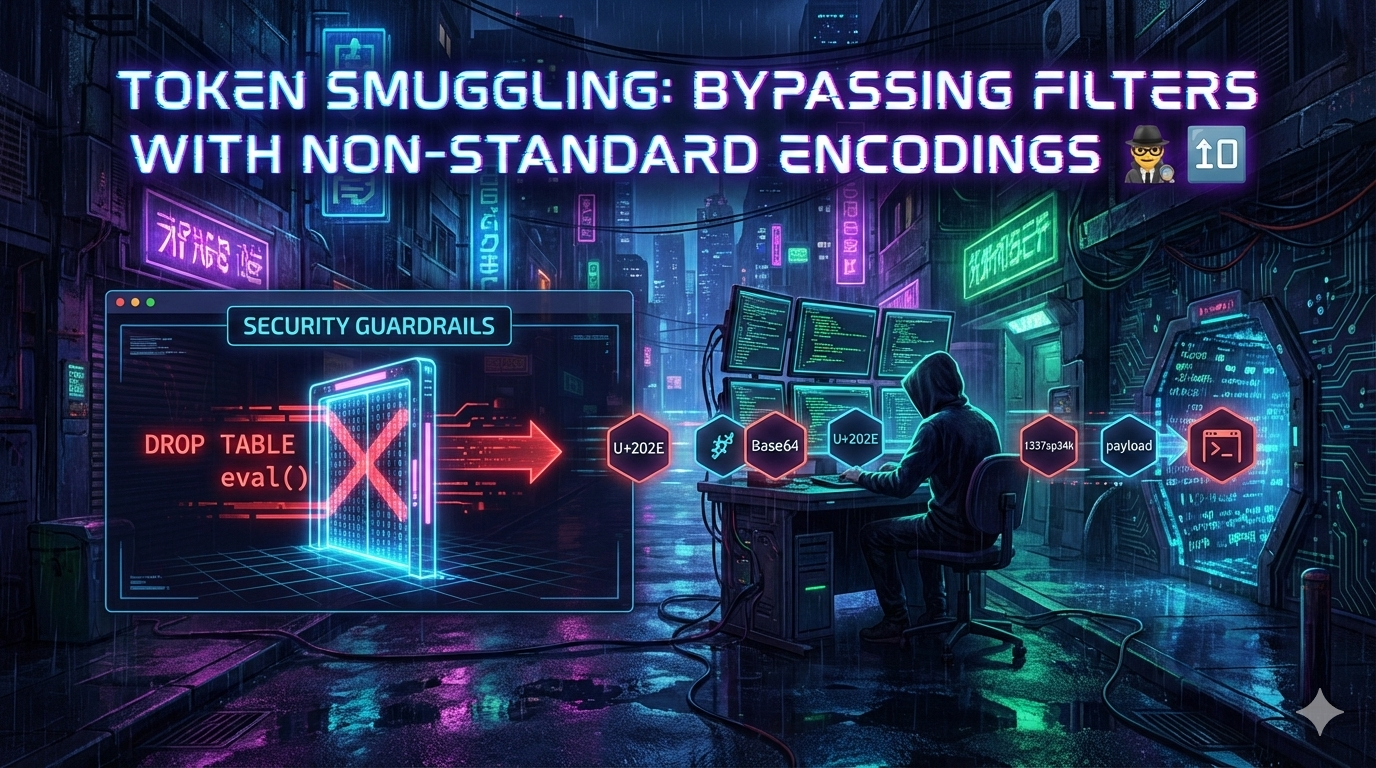

Token Smuggling is an attack on AI systems that exploits the gap between text filters and Large Language Models (LLMs). Rather than injecting code, attackers manipulate the text itself: the prompt is disguised so that a filter no longer recognizes it, while the model can still read and act on it.

Key Insights:

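- Filter-Tokenizer Gap: Traditional security filters match patterns against raw strings, while LLMs operate on the tokens those strings are split into. Text that looks harmless to a string filter can therefore still carry an instruction the model understands.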

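- Techniques of Token Smuggling:

  - Unicode Homoglyphs: Attackers swap in visually similar characters from other scripts (for example, Cyrillic "а" in place of Latin "a") so that a blocked keyword no longer matches the filter's pattern.

  - Encoding Wrappers: Encodings such as Base64 hide harmful instructions from string filters, while a model that can decode them may still follow the payload.

  - Glitch Tokens: Under-trained tokens in the model's vocabulary can trigger erratic or unexpected output.

  - Invisible Characters: Zero-width and other non-printing characters inserted inside a keyword break exact string matching without changing what a reader sees.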
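To make the filter-tokenizer gap concrete, here is a minimal Python sketch of a naive keyword filter being bypassed by three of the techniques above, next to a hardened variant that normalizes and decodes the input before matching. Everything in it is an illustrative assumption and not a reference implementation: the blocklist phrase, the function names (`naive_filter`, `normalize`, `decoded_payloads`, `hardened_filter`), the tiny hand-picked homoglyph map, and the Base64 length threshold are all hypothetical. Glitch tokens are left out because they depend on a specific model's tokenizer vocabulary.

```python
import base64
import binascii
import re
import unicodedata

# Hypothetical blocklist, for illustration only.
BLOCKED_PHRASES = ("ignore previous instructions",)

# Tiny hand-picked Cyrillic -> Latin map (an assumption, far from complete).
# NFKC normalization alone does not fold these cross-script lookalikes.
CONFUSABLES = str.maketrans({
    "\u0430": "a",  # Cyrillic a
    "\u0435": "e",  # Cyrillic e
    "\u043e": "o",  # Cyrillic o
    "\u0440": "p",  # Cyrillic er
    "\u0441": "c",  # Cyrillic es
    "\u0456": "i",  # Cyrillic/Ukrainian i
})

# Runs of 16+ Base64 alphabet characters; the threshold is arbitrary.
BASE64_RUN = re.compile(r"[A-Za-z0-9+/]{16,}={0,2}")


def naive_filter(text: str) -> bool:
    """Return True if the raw string contains a blocked phrase."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in BLOCKED_PHRASES)


def normalize(text: str) -> str:
    """Fold compatibility forms, drop invisible characters, map homoglyphs."""
    text = unicodedata.normalize("NFKC", text)
    # Category "Cf" (format) covers zero-width space/joiner, BOM, soft hyphen.
    text = "".join(ch for ch in text if unicodedata.category(ch) != "Cf")
    return text.lower().translate(CONFUSABLES)


def decoded_payloads(text: str) -> list[str]:
    """Return UTF-8 strings recovered from Base64-looking runs in the text."""
    payloads = []
    for match in BASE64_RUN.finditer(text):
        try:
            raw = base64.b64decode(match.group(0), validate=True)
            payloads.append(raw.decode("utf-8"))
        except (binascii.Error, UnicodeDecodeError):
            continue  # Not valid Base64 or not text: ignore this run.
    return payloads


def hardened_filter(text: str) -> bool:
    """Check the normalized input and any decoded Base64 payloads."""
    views = [normalize(text)]
    views += [normalize(payload) for payload in decoded_payloads(text)]
    return any(phrase in view for view in views for phrase in BLOCKED_PHRASES)
```

With this sketch, an input such as `"ig\u200bnore previous instructions"` (a zero-width space inside the keyword) slips past `naive_filter` but is caught by `hardened_filter`; the same holds for a Cyrillic "о" substitution and for a Base64-wrapped copy of the phrase. A production filter would need a far larger confusables table and handling for nested or alternative encodings.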

Because each of these tactics targets the difference between what a filter sees and what the model reads, understanding them is vital for developers and security professionals alike.
