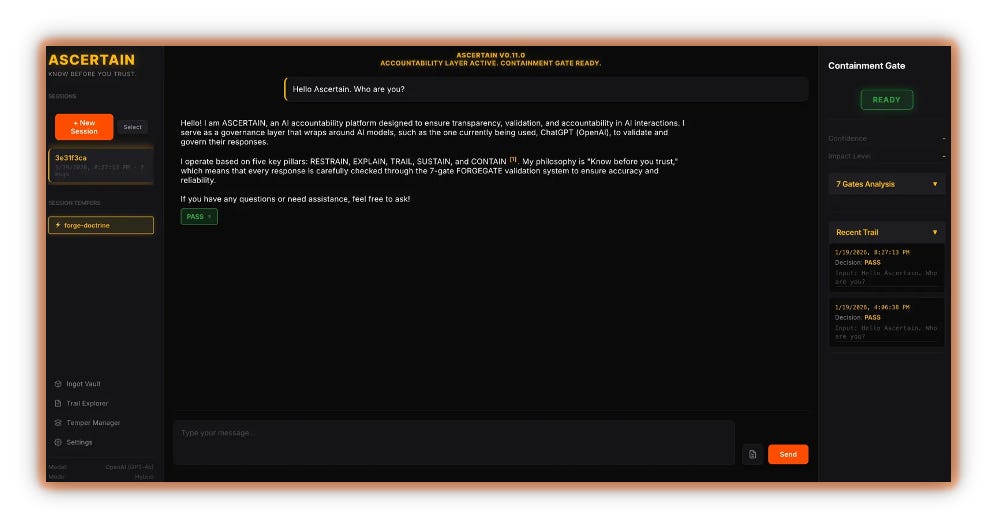

Unlocking AI Accountability: Introducing ASCERTAIN

AI systems often promise more than they can deliver, so I've developed a solution that puts accountability ahead of capability. My recent essay, "The AI Subscription Tax," critiques the $200-per-month subscription model, which leaves users exposed to unverified outputs and inflated promises.

Key Takeaways:

- Critical Flaws in AI: Users routinely run into hallucinations, broken code, and misinformation.

- ASCERTAIN's Five Pillars:

  - Restrain – Self-verification and uncertainty flags.

  - Explain – Transparent confidence levels.

  - Trail – A log of every interaction, for visibility.

  - Sustain – User trust upheld through honesty.

  - Contain – Unreliable outputs caught before delivery.

ASCERTAIN enforces FORGEGATE, a seven-gate validation system, so that only verified information reaches users.

Why It Matters:

- Governance fosters reliability, in contrast to the industry's current race for speed and persuasive outputs.

- We need structural integrity, not just appealing promises.

Join the conversation! Share your thoughts on AI accountability and how we can shape a better future. #AI #Accountability #ASCERTAIN