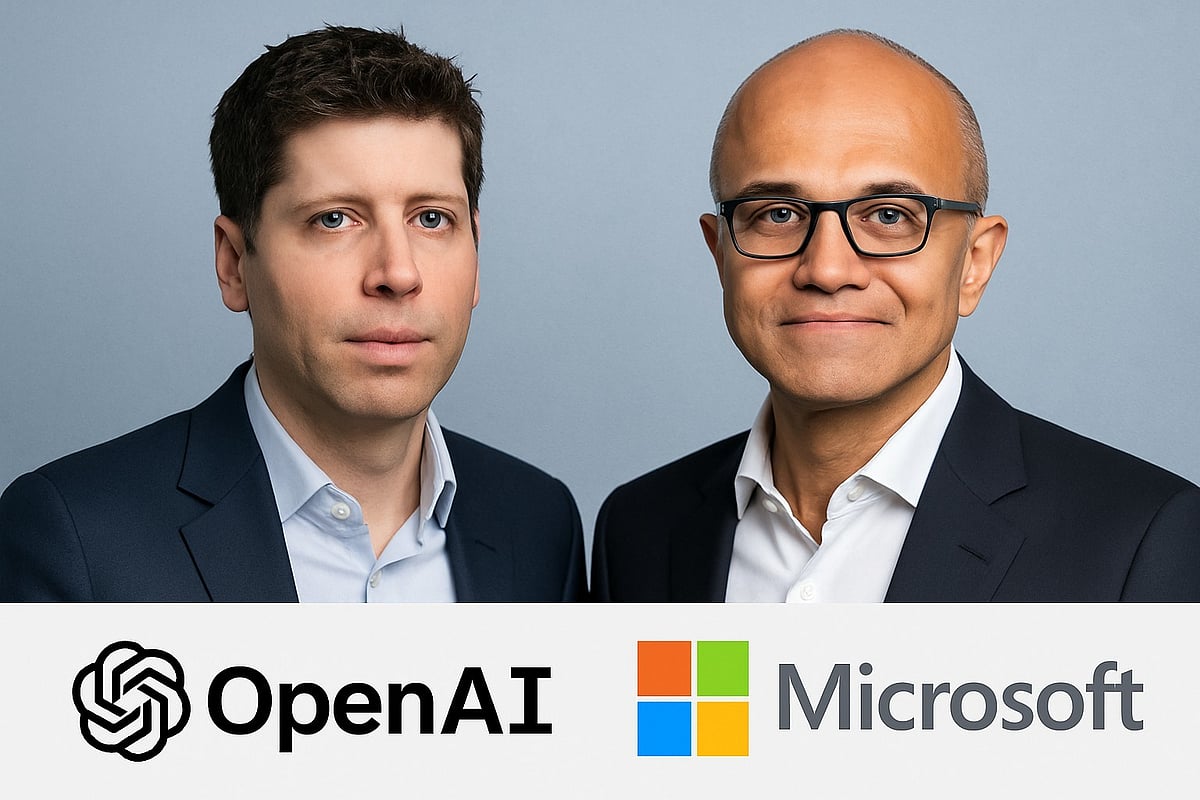

OpenAI and industry leaders such as Microsoft are advocating a balanced approach to AI development, one that calls for precautionary measures in alignment research, shared verification standards, independent audits, and international coordination. The central challenge is to meet urgent governance demands without stalling continued innovation in AI technology. That tension raises a key policy question: how can beneficial advances be encouraged without creating significant systemic risks?

As AI systems grow more powerful, the focus must stay on responsible practices that keep their applications safe and ethical. Collaboration among global stakeholders is essential to build a framework that supports both innovation and effective risk management. The aim of this dual approach is to make AI development a force for good, with progress aligned to societal well-being.
